At WWDC 2017, Apple announced ARKit, a new framework for building Augmented Reality (AR) experiences into apps. In this article, you'll get a brief idea of what AR is and what ARKit contains.

What is AR?

AR (Augmented Reality) is a technology that places virtual objects into a view of the real world. Below is a simple AR demo:

In the above image, the chair is a virtual object created by code and drawn by the GPU, while the background is the real world captured by the camera. This is exactly how AR works: it combines the virtual and the real. An AR system is made up of a few components:

- Capture Real World: the background in the above screenshot is the real world; a camera is normally used to capture it.

- Virtual World: the red chair above is one virtual object. Many virtual objects can be combined to build a complex virtual world.

- Rendering: combines the virtual world with the real world in the final image.

- World Tracking: when the view of the real world changes (for instance, when the camera moves), the position of the virtual object (the chair) on screen must change accordingly.

- Scene Understanding: the chair above sits on the ground, which the AR system has detected.

- Hit-testing: lets the user interact with the virtual object, for example zooming in and out or dragging the chair.

The overall structure of an AR system would be:

ARKit Overview

ARKit is the framework Apple introduced at WWDC 2017 for AR development on iOS. The requirements for using ARKit are:

Software:

Xcode 9 and above;

iOS 11 and above;

macOS 10.12.4 and above;

Hardware: iOS devices with an A9 processor or newer (iPhone 6s and later devices)
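
Because older devices do not meet the hardware requirement, an app usually checks for support at run time before offering AR features. Here is a minimal sketch of such a check. It assumes the class names of the released iOS 11 SDK, where the world-tracking configuration is called ARWorldTrackingConfiguration (the beta-era name was ARWorldTrackingSessionConfiguration). The function name is made up for this example.

```swift
import ARKit

// Hypothetical helper: reports whether this device can run a
// world-tracking AR session. `isSupported` is a class property
// that ARKit provides on its configuration classes.
func deviceSupportsWorldTracking() -> Bool {
    return ARWorldTrackingConfiguration.isSupported
}
```

An app could call this helper before showing any AR screen and fall back to a non-AR experience when it returns false.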

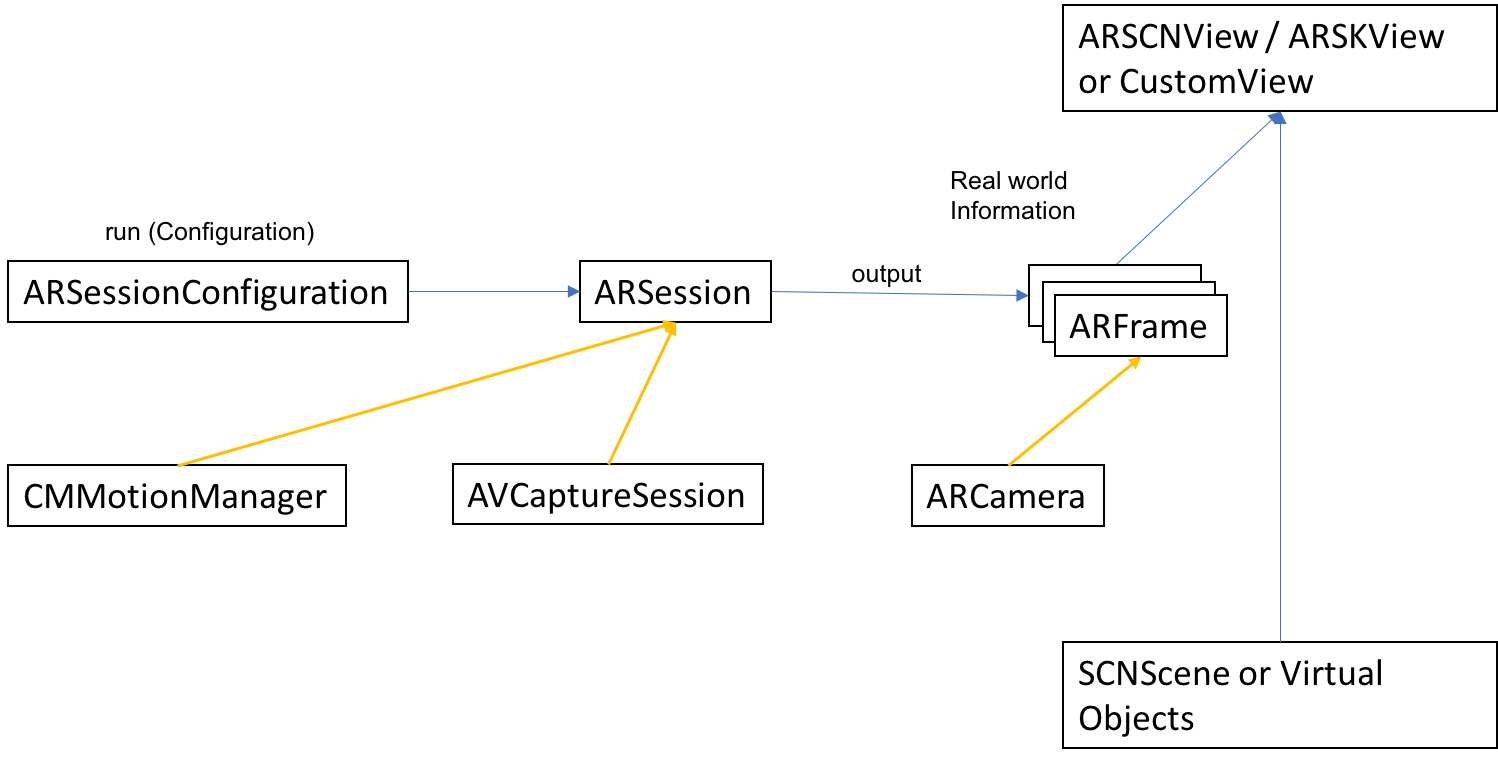

The architecture of the ARKit would be:

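ARSession, ARSessionConfiguration, ARFrame, and ARCamera are the main classes in the ARKit API. (ARSessionConfiguration is the name used in the iOS 11 betas; the released SDK calls it ARConfiguration.) The API as a whole can be divided into five categories: World Tracking, Virtual World, Capture Real World, Scene Understanding, and Rendering.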

In the figure above, ARSession is the core of the entire ARKit system; it implements World Tracking, Scene Understanding, and other important functions.

Build the Virtual World

ARKit does not provide its own engine for creating the virtual world; it relies on other 3D or 2D engines for that.

The engines that can be used on iOS are:

- Apple 3D Framework – SceneKit.

- Apple 2D Framework – SpriteKit.

- Apple GPU-accelerated graphics API – Metal.

- OpenGL ES

- Unity3D

- Unreal Engine

There is no explicit requirement to use any particular one.

ARKit does not dictate how developers build the virtual world. Developers can take the output of ARKit, that is, the world tracking and scene understanding information carried in each ARFrame, and use it to render the virtual world created by their graphics engine into the real world. Additionally, ARKit provides the ARSCNView class, which is based on SceneKit and offers a simple API for rendering a virtual world into the real world.
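
To make the ARSCNView route more concrete, here is a minimal sketch of a view controller that shows the camera feed with one virtual object on top. It is an illustration under assumptions, not the code behind the demo above: the class name ChairViewController is made up, a red box stands in for the chair model, and the configuration class uses its released iOS 11 name, ARWorldTrackingConfiguration.

```swift
import UIKit
import SceneKit
import ARKit

// Hypothetical view controller used only for this example.
final class ChairViewController: UIViewController {
    // ARSCNView draws the camera image and the SceneKit content together.
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)

        // Placeholder virtual object: a 10 cm red box placed 0.5 m in
        // front of the position where the session starts (the camera
        // looks along the negative Z axis).
        let box = SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0)
        box.firstMaterial?.diffuse.contents = UIColor.red
        let node = SCNNode(geometry: box)
        node.position = SCNVector3(0, 0, -0.5)
        sceneView.scene.rootNode.addChildNode(node)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // The view owns an ARSession; running it starts world tracking.
        sceneView.session.run(ARWorldTrackingConfiguration())
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }
}
```

Because ARKit uses the camera, the app's Info.plist also needs a camera usage description (NSCameraUsageDescription), otherwise the session cannot start.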

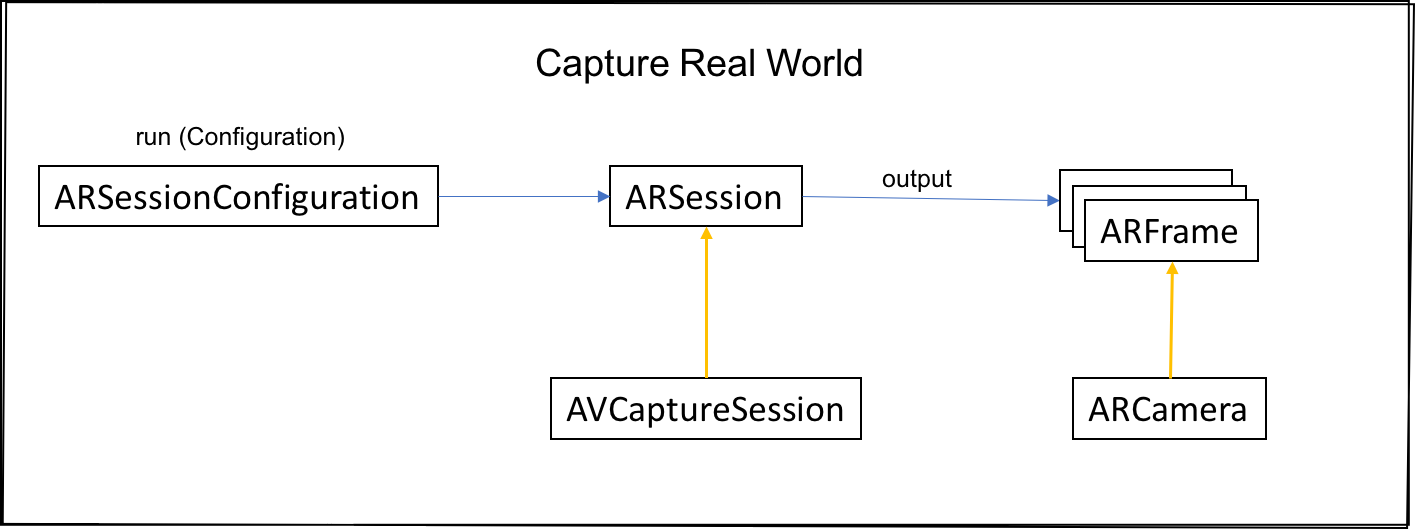

Capture Real World

Capturing the real world means using the real-world scene as the background of the ARKit display.

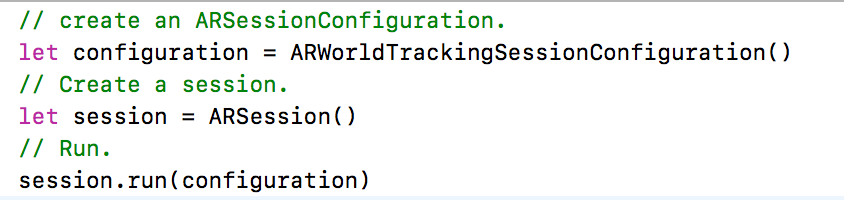

To use ARKit, we have to create an ARSession object and run it.
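
A minimal sketch of that step might look like the following. The wrapper class SessionController is a made-up name for this example, and the configuration class uses its released iOS 11 name, ARWorldTrackingConfiguration, rather than the beta-era name.

```swift
import ARKit

// Hypothetical wrapper that owns one ARSession.
final class SessionController {
    // The session manages camera capture and motion processing.
    let session = ARSession()

    func start() {
        // A world-tracking configuration tracks the device's
        // position and orientation in the real world.
        let configuration = ARWorldTrackingConfiguration()
        session.run(configuration)
    }

    func stop() {
        // Pausing stops camera capture and tracking.
        session.pause()
    }
}
```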

As the code above shows, running an ARSession is very simple. So how does the ARSession capture the real-world scene at a lower level?

- The ARSession uses an AVCaptureSession to capture video from the camera (a sequence of image frames).

- The ARSession processes the captured image sequence and outputs ARFrame objects, each of which contains the information ARKit has gathered about the real-world scene.
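
One way to see these frames arrive is through the session delegate. The sketch below assumes a made-up class name, FrameReceiver, and simply logs the size of each captured image and the camera position; a real app would pass this data to its renderer instead.

```swift
import ARKit

// Hypothetical delegate that logs every frame the session produces.
final class FrameReceiver: NSObject, ARSessionDelegate {
    // ARKit calls this method each time it has processed a new frame.
    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // The camera image captured for this frame.
        let pixelBuffer: CVPixelBuffer = frame.capturedImage
        let width = CVPixelBufferGetWidth(pixelBuffer)
        let height = CVPixelBufferGetHeight(pixelBuffer)

        // The last column of the camera transform holds its position
        // in world space.
        let cameraPosition = frame.camera.transform.columns.3

        print("Frame \(frame.timestamp): \(width)x\(height), camera at \(cameraPosition)")
    }
}
```

To use it, assign an instance to the session's delegate property and keep a strong reference to that instance, since the session holds its delegate weakly.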

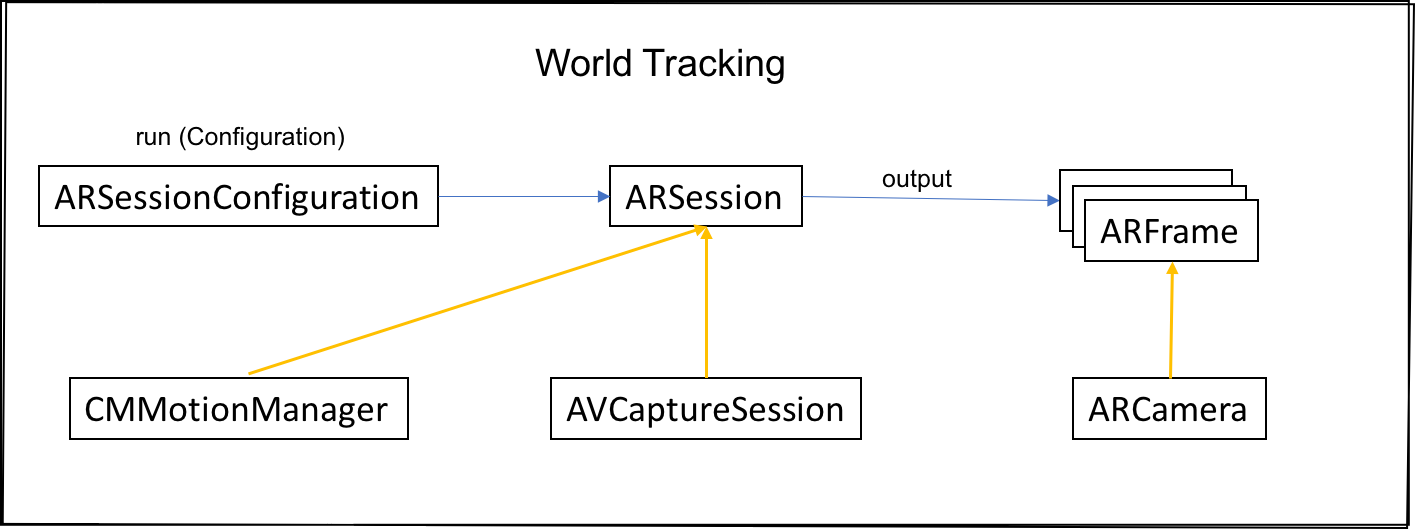

World Tracking

In the AR demo introduced in the first part, the virtual chair sits on the ground and can be seen from different angles as the camera moves. We can also move the chair. None of this can be achieved without world tracking. World tracking provides the information needed to combine the real world and the virtual world, so that virtual objects look convincing in the real scene.
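
As a small worked example of what tracking data makes possible, the sketch below computes the world-space point a given distance straight ahead of the camera. It assumes the camera pose is available as a 4x4 transform matrix, as ARCamera's transform property provides, and that the camera looks along its negative Z axis. The function name pointInFront is made up for this example.

```swift
import simd

/// Returns the world-space point `distance` metres straight ahead
/// of a camera whose pose is given by `cameraTransform`.
func pointInFront(of cameraTransform: simd_float4x4, distance: Float) -> simd_float3 {
    // In camera space the viewing direction is -Z, so the point
    // sits at (0, 0, -distance). The trailing 1 makes it a position.
    let pointInCameraSpace = simd_float4(0, 0, -distance, 1)

    // Multiplying by the camera transform converts it to world space.
    let pointInWorldSpace = cameraTransform * pointInCameraSpace
    return simd_float3(pointInWorldSpace.x, pointInWorldSpace.y, pointInWorldSpace.z)
}
```

With a live session, the current frame's camera transform could be passed in to place a new object in front of the user, wherever the device has moved to.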

Scene Understanding

The main function of scene understanding is to analyze the real-world scene, detecting planes and other information, so that we can place virtual objects at physical locations. ARKit provides three kinds of scene understanding: plane detection, hit-testing, and light estimation. These will be explained in the second part.
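
As a brief preview, plane detection and light estimation are switched on through the session configuration. The sketch below assumes the released iOS 11 class name ARWorldTrackingConfiguration, and the function name is made up for this example.

```swift
import ARKit

// Hypothetical helper that builds a configuration with scene
// understanding features enabled.
func makeSceneUnderstandingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()

    // Ask ARKit to look for horizontal surfaces such as floors and tables.
    configuration.planeDetection = .horizontal

    // Ask ARKit to estimate the lighting of the real scene.
    configuration.isLightEstimationEnabled = true

    return configuration
}
```

Passing this configuration to the session's run method starts a session with both features active.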