While there are many reasons to push data integration projects forward, healthcare organizations are often held back from using their data by incompatible formats, limitations of databases and systems, and the inability to combine data from multiple sources. This is why cloud-based data lakes have replaced the enterprise data warehouse (EDW) as the core of a modern healthcare data architecture.

Unlike a data warehouse, a data lake is a collection of all data types: structured, semi-structured, and unstructured. Data is stored in its raw format without the need for any predefined structure or schema. In fact, the data structure doesn’t need to be defined when the data is captured, only when it is read (an approach known as schema-on-read). Because data lakes are highly scalable, you can support larger volumes of data at a lower cost.
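As a minimal illustration of schema-on-read, the Python sketch below keeps raw records exactly as they arrived (JSON strings here, which is an assumption) and applies a schema only when a consumer reads them. The `Member` type, the field names, and the `read_members` helper are hypothetical.

```python
import json
from dataclasses import dataclass
from datetime import date

# Raw records exactly as they landed. Nothing was validated at write time,
# so records from different sources can have different shapes.
RAW_RECORDS = [
    '{"member_id": "M001", "dob": "1980-02-14", "plan": "HMO"}',
    '{"member_id": "M002", "dob": "1975-11-03", "plan": "PPO", "pcp": "P77"}',
    '{"device_id": "D9", "heart_rate": 72}',  # an IoT reading with a different shape
]

MEMBER_FIELDS = ("member_id", "dob", "plan")


@dataclass
class Member:
    member_id: str
    dob: date
    plan: str


def read_members(lines):
    """Apply the Member schema at read time, skipping records it does not describe."""
    members = []
    for line in lines:
        record = json.loads(line)
        if not all(name in record for name in MEMBER_FIELDS):
            continue  # not a member record; it stays in the lake untouched
        members.append(
            Member(
                member_id=record["member_id"],
                dob=date.fromisoformat(record["dob"]),  # typed only when read
                plan=record["plan"],
            )
        )
    return members


members = read_members(RAW_RECORDS)
print([m.member_id for m in members])  # ['M001', 'M002']
```

A different consumer could read the same raw records with a different schema (for example, the device readings) without anything being reloaded.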

With a data lake, data can also be stored from relational sources (like databases) and from non-relational sources (IoT devices/machines, social media, etc.) without first passing through ETL (extract, transform, load), which makes the data available for analysis much faster.

The enterprise data warehouse (EDW) as we know it is not dead, nor will it be any time soon. However, it’s no longer the centerpiece of an enterprise’s data architecture strategy. The EDW remains a mission-critical component of a company’s overall information technology architecture, but it should now be viewed as a “downstream application”: a destination, but not the center of your data universe.

Next Steps in Building a Modern Enterprise Data Architecture

The journey to building a modern enterprise data architecture can seem long and challenging, but with the right framework and principles, you can successfully make this transformation sooner than you think.

Data lakes are intentionally built to be flexible, expandable, and connectable to a variety of data sources and systems. By bringing these systems together, we can load/extract, curate/transform, and publish/load disparate data across the healthcare organization. When designed well, a data lake is an effective data-driven design pattern for capturing a wide range of data types, both old and new, at large scale. By definition, a data lake is optimized for the quick ingestion of raw, detailed source data plus on-the-fly processing of that data for exploration, analytics, and operations.

Organizations are adopting the data lake design pattern (whether on Hadoop or a relational database) because lakes provision the kind of raw data that users need for data exploration and discovery-oriented forms of advanced analytics. A data lake can also be a consolidation point for both new and traditional data, thereby enabling analytic correlations across all data.

The Data Lake’s Strength Leads to a Weakness

By its very nature, a data lake will grow by leaps and bounds if left unattended. It can ingest data at a variety of speeds, from batch to real-time. Its unstructured data comes in all shapes and forms, leading to an accumulation of ‘noise’: data without meaning or purpose. Gartner’s Hype Cycle for 2017 reveals that the early excitement around data lakes is giving way to growing disillusionment with their unrealistic expectations.

Data lakes were originally expected to solve these problems and improve outcomes. Instead, many of them became data swamps.

The Four Zones of a Data Lake

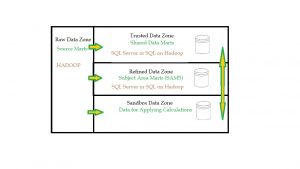

To get around the problem of the ‘Data Swamp’, we need to understand and create four zones within the data lake. Through the process of data governance, we can control the quality and quantity of the information we use and report on. Distinct data zones allow us to define, analyze, govern, archive and standardize our data to give it meaning and organization for a multitude of platforms and analytical systems.

To define these zones, the ebook Big Data Science and Advanced Analytics identifies a need to separate the physical data structures and systems from the logical models. By using servers and clusters, we can virtualize the data in a variety of configurations, feeding different downstream applications under appropriate control and governance. Healthcare analytics depend on a reliable and consistent feed of information from legacy and transactional systems, such as member eligibility, benefits and pricing, utilization, provider networks, and claims adjudication.

By creating distinct zones, the data lake can consume the variety of incoming information, transform it, merge it with crosswalks and lookups, and then feed it to analytical systems.

Data lakes are divided into four zones (Figure 1). Different healthcare organizations may call them by different names, but their functions are essentially the same.

- Raw Data Zone

- Trusted Data Zone

- Refined Data Zone

- Sandbox Data Zone

Raw Data Zone

In the Raw Data Zone, data is moved in its native format, without transformation or conversion. It is brought over ‘as is’, which ensures that the information arrives unchanged and allows the raw data to be seen exactly as it existed in its source systems.

This zone is too complex for less technical users. Its typical users are ETL developers and data scientists, who can derive new meaning and structure from the original values by sifting through the vast amount of data. This new information is then pushed into the other zones.
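The ‘as is’ principle of the Raw Data Zone can be sketched as follows, assuming a file-based raw zone: the source file is copied byte for byte, and a SHA-256 checksum is stored alongside it so anyone can later confirm the raw copy has not changed. The directory layout, the sidecar metadata file, and the `land_raw_file`/`verify_raw_file` helpers are hypothetical, not features of any particular data lake product.

```python
import hashlib
import json
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def land_raw_file(source: Path, raw_zone: Path, source_system: str) -> dict:
    """Copy a source file into the raw zone byte for byte and record its checksum."""
    target_dir = raw_zone / source_system
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / source.name
    shutil.copyfile(source, target)  # exact copy: no parsing, no conversion

    metadata = {
        "source_system": source_system,
        "original_name": source.name,
        "sha256": hashlib.sha256(target.read_bytes()).hexdigest(),
        "landed_at": datetime.now(timezone.utc).isoformat(),
    }
    # Sidecar metadata file keeps lineage next to the untouched data.
    (target_dir / (source.name + ".meta.json")).write_text(json.dumps(metadata))
    return metadata


def verify_raw_file(raw_file: Path, metadata: dict) -> bool:
    """Return True if the raw copy still matches the checksum taken at landing."""
    return hashlib.sha256(raw_file.read_bytes()).hexdigest() == metadata["sha256"]


# Usage: a temporary directory stands in for the raw zone.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "claims_extract.txt"
    src.write_bytes(b"CLM|1001|250.00\nCLM|1002|75.50\n")
    meta = land_raw_file(src, Path(tmp) / "raw", "claims_system")
    landed = Path(tmp) / "raw" / "claims_system" / "claims_extract.txt"
    unchanged = landed.read_bytes() == src.read_bytes()
    verified = verify_raw_file(landed, meta)

print(unchanged, verified)  # True True
```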

Trusted Data Zone

In the Trusted Data Zone, source data is transformed and curated into meaningful information. It is prepared for common use, merged with other data, crosswalked, and translated. Terminology is standardized according to data governance rules.

Data dictionaries are created, and this data becomes the building blocks that extend across the enterprise. Trusted data such as claims, capitation, laboratory results, utilization, admissions, and member eligibility is the foundation for accurate underwriting and risk analysis.
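Crosswalking and terminology standardization in the Trusted Data Zone often come down to lookup tables owned by data governance. Here is a minimal Python sketch; the crosswalk contents (source codes and their standard values) are illustrative assumptions, and the `standardize` helper is hypothetical. Values with no crosswalk entry are reported rather than guessed, so governance decides how to map them.

```python
from typing import Dict, List, Tuple

# Hypothetical crosswalks maintained by data governance: each maps source-system
# codes (upper-cased) to one standard value used throughout the trusted zone.
CROSSWALKS: Dict[str, Dict[str, str]] = {
    "gender": {"1": "M", "2": "F", "MALE": "M", "FEMALE": "F", "U": "U"},
    "relationship": {"SELF": "18", "SPOUSE": "01", "CHILD": "19"},
}


def standardize(record: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Translate crosswalked fields to standard values.

    Values without a crosswalk entry are left as-is and reported, so data
    governance can extend the crosswalk instead of the pipeline guessing.
    """
    standardized = dict(record)
    issues: List[str] = []
    for field_name, table in CROSSWALKS.items():
        if field_name not in record:
            continue
        key = record[field_name].strip().upper()
        if key in table:
            standardized[field_name] = table[key]
        else:
            issues.append(f"{field_name}: no crosswalk entry for {record[field_name]!r}")
    return standardized, issues


clean, problems = standardize({"member_id": "M001", "gender": "female", "relationship": "Self"})
print(clean)     # {'member_id': 'M001', 'gender': 'F', 'relationship': '18'}
print(problems)  # []

_, unmapped = standardize({"member_id": "M002", "gender": "X"})
print(unmapped)  # ["gender: no crosswalk entry for 'X'"]
```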

Refined Data Zone

The Refined Data Zone is where trusted data is curated and published for use in external enterprise data warehouses and data marts. Here, meaning is derived from the raw data: it is integrated into a common format, refined, and grouped into Subject Area Marts (SAMs). SAMs are used for analysis and complex inquiries.

SAMs provide executive leadership with analytical support for corporate and other complex decisions. They are also used for standardized per member per month (PMPM) and other operational support reporting.

SAMs become the source of truth, merging data from different original sources. They take subsets of data from a larger pool and add value and meaning that is useful to finance, budgeting and forecasting, member and provider utilization, clinical, claims adjudication/payment, and other administrative areas. Refined data is used by a broad group of people.
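Standardized PMPM reporting depends on a consistent count of member months, the denominator of every PMPM figure. The sketch below counts member months from enrollment spans. It assumes a member counts for a month if enrolled on any day of that month; organizations use different conventions (for example, enrollment on the first of the month), so treat that rule, the span layout, and the function names as hypothetical.

```python
from datetime import date
from typing import Iterable, Set, Tuple


def months_covered(start: date, end: date) -> Set[Tuple[int, int]]:
    """Return the (year, month) pairs touched by an enrollment span, inclusive."""
    months = set()
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        months.add((year, month))
        month += 1
        if month == 13:
            year, month = year + 1, 1
    return months


def member_months(spans: Iterable[Tuple[str, date, date]], year: int) -> int:
    """Count member months in a calendar year.

    Assumption: a member counts for a month if enrolled on any day of it, and
    overlapping spans for the same member count only once per month.
    """
    covered = set()
    for member_id, start, end in spans:
        for ym in months_covered(start, end):
            if ym[0] == year:
                covered.add((member_id, ym))
    return len(covered)


spans = [
    ("M001", date(2023, 1, 1), date(2023, 12, 31)),  # full year: 12 months
    ("M002", date(2023, 3, 15), date(2023, 5, 10)),  # March to May: 3 months
    ("M002", date(2023, 5, 1), date(2023, 6, 30)),   # overlaps May, adds June: 1 month
]
print(member_months(spans, 2023))  # 16
```

Deduplicating on (member, month) keeps overlapping or re-sent eligibility spans from inflating the denominator.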

Trusted data is promoted to the Refined Zone after it has been confirmed and verified by data governance or subject matter experts.

Sandbox Data Zone

The Sandbox is also known as the ‘exploration’ zone. It is used for ad hoc analysis and reporting. Users move data from the raw, trusted, and refined zones here for private use. Once the data is verified, it can be promoted for use in the Refined Data Zone.
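The movements between zones can be captured as an explicit rule set. This Python sketch assumes data flows from raw to trusted to refined, that any zone can feed the Sandbox, and that promotions into the Refined Data Zone require governance approval. The `Zone` enum, the `ALLOWED_MOVES` table, and the `promote` function are hypothetical illustrations rather than features of a specific platform.

```python
from enum import Enum


class Zone(Enum):
    RAW = "raw"
    TRUSTED = "trusted"
    REFINED = "refined"
    SANDBOX = "sandbox"


# Allowed moves between zones, mapped to whether governance sign-off is required.
# Illustrative rule set only; each organization defines its own.
ALLOWED_MOVES = {
    (Zone.RAW, Zone.TRUSTED): False,     # curation pipeline applying governance rules
    (Zone.TRUSTED, Zone.REFINED): True,  # publication needs verification
    (Zone.SANDBOX, Zone.REFINED): True,  # ad hoc results promoted once verified
    (Zone.RAW, Zone.SANDBOX): False,     # copies for private exploration
    (Zone.TRUSTED, Zone.SANDBOX): False,
    (Zone.REFINED, Zone.SANDBOX): False,
}


def promote(dataset: str, source: Zone, target: Zone, governance_approved: bool = False) -> str:
    """Check a move between zones and describe it; raise if the move is not permitted."""
    if (source, target) not in ALLOWED_MOVES:
        raise ValueError(f"{dataset}: {source.value} -> {target.value} is not an allowed move")
    if ALLOWED_MOVES[(source, target)] and not governance_approved:
        raise PermissionError(f"{dataset}: {target.value} zone requires governance approval")
    return f"{dataset}: {source.value} -> {target.value}"


print(promote("claims_2023", Zone.RAW, Zone.TRUSTED))
print(promote("claims_2023", Zone.TRUSTED, Zone.REFINED, governance_approved=True))
try:
    promote("my_analysis", Zone.SANDBOX, Zone.REFINED)
except PermissionError as err:
    denied = str(err)
print(denied)  # my_analysis: refined zone requires governance approval
```

Keeping the rules in one table makes it easy for governance to review them and hard for raw data to skip straight into the refined zone.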

The Future of Data Lakes

As the volume of healthcare data continues to grow, IT, enterprise architecture, and business governance teams must continue to review their platforms and integrations. The hardware and software needs of the business drive the decisions that shape the healthcare organization’s vision and leadership into the future. We also need to look at how the data is used, regulatory compliance, and archiving and removal processes.

Data governance plays a critical role in determining what data is required in the data lake and in each data zone. Governance teams must identify the business capabilities needed to perform critical functions and protect the organization’s assets. Afterwards, analytics and related business services can be built to support these capabilities and functions.

For more information on the role of data lakes in healthcare, please refer to the Perficient white paper The Role of Data Lakes in Healthcare.

By Steven Vacca, Technical Analyst, Perficient

Healthcare IT is ever-changing and Perficient is on the forefront of this change, guiding the industry and those we serve toward a brighter future. We partner with healthcare companies to help people live their lives to their fullest potential today, using best practices and cost saving technologies and processes.

As we look to the future of healthcare information systems, an organization’s effectiveness is measured in four areas: the integration, accuracy, consistency, and timeliness of health information. These four areas are at the heart of who we are and what we do.

Healthcare organizations are among the most complex organizations humans have ever attempted to manage, which makes transformation a daunting task. Despite the challenges associated with change, organizations need to evolve into data-driven, outcomes-improvement organizations.

They aggregate tremendous amounts of data, and they need to figure out how to use it to drive innovation, boost the quality of care outcomes, and cut costs.

Data Integration Challenges

Besides members and providers, as well as internal/external business partners and vendors, a multitude of state and federal regulatory/compliance agencies insist on receiving our information in a near real-time manner in order to perform their own functions and services. These integration requirements are constantly changing.

As an EDI Integration Specialist, I have seen many organizations struggle to constantly keep up with the business needs of their trading partners, state and federal agencies. Often, as our trading partners analyze the information we have sent them, they discover missing data or inconsistencies.

This requires a tedious and painful iterative remediation process to get the missing data, and results in resending massive amounts of historical data or correcting/retro-adjudicating claims. Adjusting and recouping claim payments is always painful for all entities involved, especially providers, with possible penalties or sanctions.

In the last few years, I have worked with several clients on getting their claims information loaded into their state’s All Payer Claims Databases (APCDB) and CMS to get their health claims reimbursed. We struggled to get the complete data set loaded successfully, and to meet the rigorous quality assurance standards.

It required several attempts working with their legacy systems to get the necessary data into the correct format. It required a great deal of coordination, testing and validation. Each state has a different submission format and data requirements, not necessarily an 837 EDI format, including one state that had a 220+ field delimited record format (Rhode Island).
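A simple pre-submission check catches many structural errors in delimited state formats before a file is sent. In this sketch, the five-field pipe-delimited layout and the required field positions are hypothetical placeholders, not any state’s actual specification; the real field count, delimiter, and required elements come from each state’s submission guide.

```python
import csv
import io
from typing import List

# Hypothetical layout: replace with the values from the state's submission guide.
EXPECTED_FIELDS = 5
DELIMITER = "|"
REQUIRED_POSITIONS = {0: "submitter_id", 1: "claim_id", 3: "paid_amount"}


def validate_submission(text: str) -> List[str]:
    """Return the structural problems found in a delimited submission file."""
    problems = []
    # QUOTE_NONE treats quote characters as ordinary data, as in most fixed layouts.
    reader = csv.reader(io.StringIO(text), delimiter=DELIMITER, quoting=csv.QUOTE_NONE)
    for line_no, fields in enumerate(reader, start=1):
        if len(fields) != EXPECTED_FIELDS:
            problems.append(f"line {line_no}: expected {EXPECTED_FIELDS} fields, found {len(fields)}")
            continue
        for pos, name in REQUIRED_POSITIONS.items():
            if not fields[pos].strip():
                problems.append(f"line {line_no}: required field {name} is empty")
    return problems


sample = "SUB1|C100|20230105|125.00|N\nSUB1|C101|20230106||N\nSUB1|C102|20230107\n"
for problem in validate_submission(sample):
    print(problem)
# line 2: required field paid_amount is empty
# line 3: expected 5 fields, found 3
```

With a 220+ field layout, a field-count check like this is often the cheapest way to catch a shifted column before the state’s own validation does.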

We spent a great amount of time on compliance validation, and each submission required manual effort. We constantly had to monitor each submission’s file acceptance status, handling original and adjusted claims differently using the previously accepted claim ID. If files were not submitted accurately and in a timely manner, significant fines were imposed.
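Handling originals and adjustments differently comes down to remembering which claims the agency has already accepted, and under which ID. The following is a minimal sketch under stated assumptions: the `SubmissionTracker` class, the record fields, and the rule that an adjustment references the most recently accepted claim ID are hypothetical, and each agency’s format defines its own original/adjustment indicators.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class SubmissionTracker:
    """Hypothetical tracker for claims sent to a state or federal agency.

    accepted maps our internal claim number to the claim ID the agency
    returned when it accepted the most recent version of that claim.
    """
    accepted: Dict[str, str] = field(default_factory=dict)
    pending: Set[str] = field(default_factory=set)

    def build_record(self, claim: str, paid_amount: float) -> dict:
        """Create an original record the first time, an adjustment afterwards."""
        if claim in self.pending:
            raise RuntimeError(f"{claim}: waiting for the previous file's acceptance status")
        prior: Optional[str] = self.accepted.get(claim)
        self.pending.add(claim)
        if prior is None:
            return {"claim": claim, "type": "original", "paid": paid_amount}
        # Adjustments must point at the previously accepted claim ID.
        return {"claim": claim, "type": "adjustment", "replaces": prior, "paid": paid_amount}

    def record_acceptance(self, claim: str, accepted_id: str) -> None:
        self.pending.discard(claim)
        self.accepted[claim] = accepted_id

    def record_rejection(self, claim: str) -> None:
        # A rejected file was never accepted, so the claim keeps its prior status.
        self.pending.discard(claim)


tracker = SubmissionTracker()
first = tracker.build_record("CLM-1001", 250.00)
tracker.record_acceptance("CLM-1001", "ST-778812")
second = tracker.build_record("CLM-1001", 200.00)
print(first["type"], second["type"], second["replaces"])  # original adjustment ST-778812
```

Blocking a resend while a claim is still pending avoids submitting a second original before the first file’s acceptance status is known.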

Several times we discovered that even though the files were successfully accepted, information was still missing and needed to be resubmitted. To be honest, it was a logistical nightmare.

As we design and develop data integrations, APIs, and extracts, we often take ‘shortcuts’ to deliver data because of competing priorities, accelerated project delivery schedules, or limited development/testing staff. As a result, we don’t give our full attention to the complete requirements of the client or trading partners.

Companion guides and documentation are often vague and say ‘send if known’. Several years later, organizations realize that these ‘shortcuts’ have been found out, possibly leading to penalties and corrective action plans. Sometimes legacy system and technical limitations mean that the complete required record set is simply not available.

Limitations of electronic health record (EHR) systems, combined with variable levels of expertise in outcomes improvement, impede the health system’s ability to transform.

In many healthcare organizations, information technology (IT) teams—including data architects and data analysts—and quality and clinical teams work in silos. IT provides the technologies, designs and delivers reports, without a clear understanding of the needs of the quality and clinical teams.

This can sometimes turn into a finger-pointing exercise. Quality and clinical teams claim IT is not delivering the data they need to succeed, while IT insists that others are not clearly articulating what they need. It takes clear-eyed analysis to see that the teams are failing to work together to prioritize their outcomes improvement initiatives and drive sustainable outcomes.

How Can Health Care/System Redesign Be Put Into Action?

At Perficient, we can provide a comprehensive picture of your organization’s information needs and a path to implementing complex system redesigns and simplifying integrations. Health care redesign can be put into action in the following four general phases:

1. Getting started. The most important part of building a skyscraper is reviewing the requirements, developing a blueprint, and building a robust foundation. The first phase involves devising a strategic plan and assembling a leadership team to focus on quality improvement efforts. The team should include senior leaders, clinical champions (clinicians who promote the redesign), and administrative leaders. We need to develop a long-term strategy that sunsets legacy systems, consolidates business functions, builds synergies between departments, and aggregates data into a central repository. High-level needs assessments are performed, scope is defined to limit effort, and a change management process is created to assist in project management. A business governance committee determines which business decisions are implemented and when. A technical/architectural review committee approves the overall design and data governance of systems, interfaces, and integrations of enterprise systems.

2. Review the complete electronic dataset. This includes building a corporate data dictionary (covering pricing/benefits, membership, providers, claims, utilization, brokers, authorizations/referrals, reference data and code sets, etc.) and setting priorities for improvement. The second phase involves gathering data to help inform those priorities. Once data requirements are gathered, performance measures such as NCQA/HEDIS that represent the major clinical, business, satisfaction, and operations goals for the practice can be identified. Corporate reporting and process needs are critical at this phase to ensure compliance and meet internal and external customers’ requirements. Dashboards and user reports that are easy to manage and provide the right information at the right time can make the difference in cost savings and effective management throughout the organization. These dashboards allow users to keep an eye on the overall health and utilization of the services they provide to their members.

One of the most helpful EDI integration practices I have found is to perform a source-to-target gap analysis between the core claims/membership systems, my inbound/outbound EDI staging database, and the EDIFECS/GENTRAN mapping logic that translates data into outbound, and from inbound, X12 EDI 837 claims and 834 membership enrollment files. The resulting mapping document also identifies any transformations, conversions, or lookups needed to convert proprietary values to HIPAA standard values. By looking at every EDI loop/segment/element and mapping it all the way through, I was able to identify data fields that were not being sent or were being sent incorrectly. I include this mapping document in the technical specifications I give to my EDI developers, and I customize it for specific trading partners while reviewing the vendors’ companion guides.
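A source-to-target gap analysis like the one described above can be partly automated once the mapping document is in a machine-readable form. In this Python sketch, the element labels, staging column names, and lookup values are illustrative assumptions, and `gap_analysis` is a hypothetical helper. It flags unmapped target elements, mapped columns missing from the staging table, empty values, and proprietary values with no standard equivalent.

```python
from typing import Dict, List, Optional, Set

# Hypothetical source-to-target map for an outbound enrollment file. Element
# labels and column names are illustrative; a real map covers every loop,
# segment, and element in the trading partner's companion guide.
MAPPING: Dict[str, Dict[str, Optional[str]]] = {
    "NM109 member ID": {"source": "member_id", "lookup": None},
    "DMG02 birth date": {"source": "birth_dt", "lookup": None},
    "DMG03 gender": {"source": "sex_cd", "lookup": "gender"},
    "INS02 relationship": {"source": "rel_cd", "lookup": "relationship"},
    "REF02 group number": {"source": None, "lookup": None},  # not mapped yet
}

# Proprietary-to-standard value lookups (illustrative values).
LOOKUPS: Dict[str, Dict[str, str]] = {
    "gender": {"1": "M", "2": "F"},
    "relationship": {"S": "18", "SP": "01", "C": "19"},
}


def gap_analysis(source_columns: Set[str], rows: List[Dict[str, str]]) -> List[str]:
    """List target elements that would be missing or wrong in the outbound file."""
    gaps: List[str] = []
    for target, rule in MAPPING.items():
        column, lookup_name = rule["source"], rule["lookup"]
        if column is None:
            gaps.append(f"{target}: no source column mapped")
            continue
        if column not in source_columns:
            gaps.append(f"{target}: column {column} not found in staging table")
            continue
        if lookup_name is not None and lookup_name not in LOOKUPS:
            gaps.append(f"{target}: lookup table {lookup_name} is missing")
            continue
        table = LOOKUPS[lookup_name] if lookup_name else None
        for row in rows:
            value = row.get(column, "")
            if not value:
                gaps.append(f"{target}: {column} empty for {row.get('member_id')}")
            elif table is not None and value not in table:
                gaps.append(f"{target}: no standard value for {column}={value!r}")
    return gaps


staging_columns = {"member_id", "birth_dt", "sex_cd", "rel_cd"}
sample_rows = [
    {"member_id": "M001", "birth_dt": "19800214", "sex_cd": "2", "rel_cd": "S"},
    {"member_id": "M002", "birth_dt": "", "sex_cd": "9", "rel_cd": "C"},
]
for gap in gap_analysis(staging_columns, sample_rows):
    print(gap)
# DMG02 birth date: birth_dt empty for M002
# DMG03 gender: no standard value for sex_cd='9'
# REF02 group number: no source column mapped
```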

3. Redesign care and business systems. The third phase involves organizing the care team around their roles, responsibilities, and workflows. The care team offers ideas for improvement and evaluates the effects of changes made. Determining how an enterprise integrates and uses often disparate systems is critical to achieving a timely, complete, and accurate data/process flow. The design, creation, and use of APIs and messaging technologies that help extract, transform, and load (ETL) information is critical, especially if the information is to be used in real-time, web-based portals. Evaluating easy-to-use yet robust batch ETL tools, such as Informatica, is the cornerstone of any data integration project. Healthcare organizations rely on reporting tools to evaluate, investigate, and reconcile information, especially in their financial and clinical systems. Imaging, workflow management, and correspondence generation systems are used to create and manage communications.

4. Continuously improve performance and maintain changes. The fourth phase includes ongoing review of clinical and financial integration outcomes and making adjustments for continued improvement. Looking to the future, we need to examine the IT architecture and its ability to expand with ever-changing technology and capability models. Perficient is a preferred partner of IBM, Oracle, and Microsoft, with extensive experience in digital and cloud-based implementations. These technologies let our clients expand their systems, spin up application servers on demand based on need and growth, support failover and redundancy, employ distributed and global databases, and virtualize software and apply upgrades transparently to end users.

Perficient’s health IT initiative for integrating health information technology and care management includes a variety of electronic methods used to manage information about people’s health and health care, for both individual patients and groups of patients. The use of health IT can improve the quality of care while also making health care more cost-effective.

Bringing in an Analytics/Reporting Platform

Implementing an enterprise data warehouse (EDW) or a data lake/analytic platform (DLAP) results in the standardization of terminology and measures across the organization and provides the ability to easily visualize performance. These critical steps allow for the collection and analysis of information organization-wide.

The EDW/DLAP aggregates data from a wide variety of sources, including clinical, financial, supply chain, patient satisfaction, and other operational data stores (ODS) and data marts.

It provides broad access to data across platforms for users throughout the organization, including the CEO and other operational leaders, department heads, clinicians, and front-line leaders. When faced with a problem or question that requires information, clinicians and leaders don’t have to request a report and wait days or weeks for data analysts to build it.

The analytics platform provides clinicians and leaders the ability to visualize data in near-real time, and to explore the problem and population of interest. This direct access increases the speed and scale with which we achieve improvement. Obtaining data required to understand current performance no longer takes weeks or even months.

Application simplification removes confusion about the consistency and accuracy of data within an organization. Per member per month (PMPM) reporting is delivered in a standard format throughout, regardless of line of business.
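PMPM is total cost (here, paid claims) divided by member months, so reporting it in a standard format across lines of business means applying the same calculation to each one. Here is a minimal sketch with made-up figures and a hypothetical `pmpm_by_line_of_business` function; lines of business with no member months are skipped to avoid dividing by zero.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def pmpm_by_line_of_business(
    paid_claims: Iterable[Tuple[str, float]],
    member_months: Dict[str, int],
) -> Dict[str, float]:
    """PMPM = total paid amount / member months, calculated the same way for every line of business."""
    totals: Dict[str, float] = defaultdict(float)
    for lob, paid in paid_claims:
        totals[lob] += paid
    return {
        lob: round(totals[lob] / months, 2)
        for lob, months in member_months.items()
        if months > 0
    }


claims = [("Commercial", 1200.0), ("Commercial", 800.0), ("Medicaid", 450.0), ("Medicare", 3000.0)]
months = {"Commercial": 10, "Medicaid": 5, "Medicare": 12}
result = pmpm_by_line_of_business(claims, months)
print(result)  # {'Commercial': 200.0, 'Medicaid': 90.0, 'Medicare': 250.0}
```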

The analytics platform delivers performance data used to inform organizational and clinician decision-making, evaluate the effectiveness of performance improvement initiatives, and increasingly, predict which patients are at greatest risk for an adverse outcome, enabling clinicians to mobilize resources around the patient to prevent this occurrence.

An analytics platform is incredibly powerful and gives employees and customers the ability to easily visualize the organization’s performance, setting the stage for data-driven outcomes improvement. However, healthcare providers and payers know that tools and technology alone don’t lead to improvement.

To be effective, clinicians, IT, and Quality Assurance have to partner together to identify best practices and design systems to adopt them by building the practices into everyday workflows. Picking the right reporting and analytical tool and platform is critical to the success of the integration project.

Big data tools such as Hadoop, Hive, and Hue, along with cloud technologies, are used to bring various data sources together into a unified platform for the end user.

Roadmap to Transformation

Perficient provides a full-service IT roadmap to transform your healthcare organization and increase the personalization of care through digital transformation in healthcare. New health system technology, such as moving beyond basic EMR (Electronic Medical Record) infrastructure to full patient-focused CRM (Customer Relationship Management) solutions, has enabled healthcare organizations to integrate extended care teams, enhance patient satisfaction, and improve the efficiency of care.

We connect human insight with digital capabilities in order to transform the consumer experience and deliver significant business value.

For more information on how Perficient can help you with your Healthcare IT integration and analytical needs, please see https://www.perficient.com/industries/healthcare/strategy-and-advisory-service
