Over the next few blog posts, I will share how you can supercharge your firm’s data governance programs by leveraging data lineage capabilities. The information I will share with you is based on many years of experience leading and supporting large data governance initiatives in financial institutions. Whether you’re in commercial, retail, or investment banking, asset management, capital markets, payments, or insurance, you can benefit from the use of data lineage concepts, approaches, and tools.

In today’s post, I will discuss the fundamentals of data lineage and its role within a data governance framework.

Much has been written about data lineage. Its function is to trace and document the journey of data elements, from their point of inception to all datasets throughout an organization. Regardless of how many hops, downloads, uploads, and transformations these data elements undergo, a capable data lineage tool will track the journey from system to system and table to table and help stitch together gaps across the points of manual intervention and processing.

Data lineage tools are the utility of choice for unraveling and bringing order to complex, interdependent system flows and are instrumental in identifying the “golden source” of critical data elements in an enterprise.

For example, as Secured Overnight Financing Rate (SOFR) replaces London Inter-Bank Offered Rate (LIBOR), financial institutions have leveraged data lineage tools to find all references to LIBOR rates. While this is a perfect use case for a data lineage tool and exactly why these tools exist, they can play an even more prominent role in an organization by enabling firms to supercharge their data governance programs.

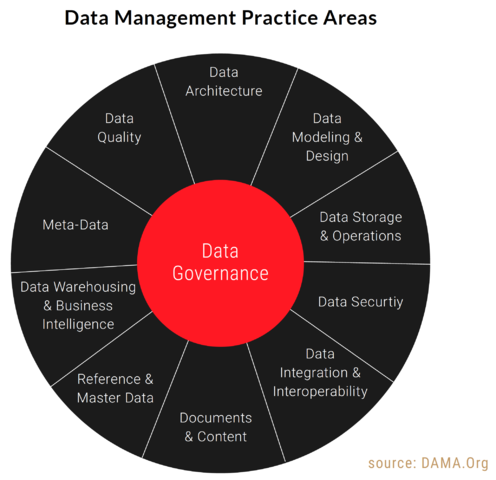

DAMA International’s DMBoK (Data Management Book of Knowledge) defines data governance as “The exercise of authority and control (planning, monitoring, and enforcement) over the management of data assets.”

In practice, data governance is the overarching term encompassing data ownership, privacy, control, security, and quality. The management of these attributes and metrics must be enforced throughout the organization, wherever the data is in transit, staged, stored, warehoused, or archived.

In support of data governance, specialized applications address one or more of these components. In some cases, it is with an integrated suite of applications, while in others, it is with a best-of-breed tool that addresses a single area.

Typically, a data governance program will leverage tools including:

- Business Glossary: Terms/definitions/classifications for key data elements

- Data Catalog: A centralized repository for all metadata elements across all datasets/tables in an enterprise

- Data Quality Rules: Quality rules for data elements; hardcoded, inferred from metadata, and/or AI/ML-based

When combined, these tools, if implemented broadly within the enterprise, effectively serve as a foundation for a data governance program. Key data elements can be fully annotated in business terms, the sensitivity of the information can be noted [e.g., personally identifiable information (PII), material non-public information (MNPI), etc.], and data repository scans by the catalog

can provide a comprehensive inventory of data fields/attributes/ characteristics. Whether assigned or derived via AI/ML inference, data quality rules can be applied at the source, repository (warehouse, lake, etc.), or at key usage points, such as regulatory reporting.

While effective, these tools, even if well integrated, yield a more manually intensive process than necessary and is ripe for gaps in control. It is in this context data lineage can transform the data governance process.

For example, if a key data element is marked at its source of origin as PII and is subsequently read, transformed, stored, downloaded, or uploaded by dozens of programs and systems through its journey, the catalog would only know of the resultant data field’s existence in the downstream data tables and files. At best, with the application of AI, the catalog might be able to infer, albeit without certainty, the field’s relationship to the key data element and its demarcation as PII.

The same loosely coupled association would apply to the business glossary and data quality rules. The further transformed or derived the resultant downstream field, the less likely even a well-trained AI/ML algorithm would be able to make the association.

Data lineage knows the whereabouts of all downstream locations of a given data element, whether unchanged, transformed, or the basis of a derivative field, and can provide the certainty needed to automatically apply the controls associated with the original element. The downstream instances (or spawn) of a given field can “inherit” the designations, privacy protections, quality rules, business terms, etc., from the source element.

When the various control attributes associated with all downstream instances of a data element are known, automated masking, encryption, and access rights can be enforced without potential gaps in governance, and without manually tagging each item.

In a similar context, the management of data access entitlements is a challenge for any data governance program. Knowing who can see sensitive data is as crucial as knowing where the data exists throughout the enterprise.

Typically, data access rights are granted on a file or table level, with some implementations driving the resolution down to the data element level, where it is supported by the associated storage capability (e.g., access to data elements within a flat file is an all-or-nothing affair). As those individuals or systems with access to data create downstream datasets containing copies or derivative versions of the original data, the original data owner (or assigned steward) often loses insight and control of the consequent entitlements.

As with the prior example of data sensitivity classifications, once data lineage is integrated into the entitlement process, any downstream datasets can inherit the entitlement restrictions associated with the original data elements, eliminating much of the manual maintenance of the function while ensuring sensitive information is protected from the onset and only viewable by those authorized to do so.

Beyond the improved visibility and control afforded by a lineage-driven data governance function, there are also significant financial benefits. Reducing the manual effort required to maintain the dynamic spawn and evolution of data across enterprise results in significant cost savings, and in turn, a superior ROI.

In my next post, I will discuss what an automated data lineage solution looks like.

In the meantime, if you are interested in learning more about this topic, consider downloading our Supercharging Data Governance in Financial Services With Data Lineage guide.