Security threat assessment models are an important part of an overall security and compliance program. To create an effective set of security policies, it is necessary to understand the types of threats, their likelihood of occurrence, the impact of a breach or incident, and how the business can mitigate or control these threats. Many different threat analysis techniques have been developed for various industries. These approaches all involve some degree of systematic “divide-and-conquer,” where the security space is divided into categories that are then investigated. For example, the HITRUST Common Security Framework (CSF) divides the overall organizational security space into 14 key categories. When performing a threat assessment, it is good practice to focus on areas of high business impact, as discussed below.

Key Concepts

There are a few key security concepts to consider when conducting a threat assessment, starting with the three properties of confidentiality, integrity, and availability (CIA). Confidentiality refers to the ability of a system to keep secret information secure against unauthorized access. Integrity ensures that information remains correct, consistent, and complete. Availability ensures that information is accessible, when needed, to those authorized to review or modify it. When considering threats to a particular system, make sure to include all three CIA areas.

A security risk is any human or environmental event that could disrupt information confidentiality, integrity, or availability. There are hundreds (if not thousands) of possible risks, but usually only a limited subset applies to any given situation. For example, an unencrypted database is subject to intrusion or data loss but is not very likely to be physically stolen from a data center. Likewise, a physical plant element (e.g. a network cable) does not need to be “encrypted” to ensure its security.

A control is a mitigation against a defined security risk. Controls can take many forms, both administrative and technical, and are intended to provide a level of protection. While many controls are general in nature (e.g. data encryption), some are defined after a threat is identified so that they can be tailored to that specific threat. For example, the threat of a critical system failure can be mitigated by high-availability techniques (e.g. deployment across multiple data centers with active-active automatic failover).
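The relationship between risks, the CIA properties they threaten, and the controls that mitigate them can be captured in a small data model. The sketch below is a minimal illustration, assuming hypothetical `Risk` and `Control` records (these names do not come from any standard); `uncovered_properties` flags CIA areas that no identified risk addresses yet, as a reminder to consider all three.

```python
from dataclasses import dataclass, field
from enum import Enum


class CIA(Enum):
    """The three security properties a risk can threaten."""
    CONFIDENTIALITY = "confidentiality"
    INTEGRITY = "integrity"
    AVAILABILITY = "availability"


@dataclass
class Control:
    """Hypothetical control record: a name plus its kind."""
    name: str
    kind: str  # "administrative" or "technical"


@dataclass
class Risk:
    """Hypothetical risk record linking a threat to the CIA properties it affects."""
    description: str
    source: str            # e.g. "human" or "environmental"
    threatens: set[CIA]
    controls: list[Control] = field(default_factory=list)

    def is_mitigated(self) -> bool:
        # Simplification: any attached control counts; judging adequacy is left to the team.
        return bool(self.controls)


def uncovered_properties(risks: list[Risk]) -> set[CIA]:
    """Return the CIA properties that none of the identified risks threaten."""
    covered = set().union(*(risk.threatens for risk in risks))
    return set(CIA) - covered


failover = Control("multi-data-center active-active failover", "technical")
outage = Risk("critical system failure", "environmental", {CIA.AVAILABILITY}, [failover])
intrusion = Risk("intrusion into unencrypted database", "human", {CIA.CONFIDENTIALITY})

print(uncovered_properties([outage, intrusion]))  # integrity has not been considered yet
print([r.description for r in (outage, intrusion) if not r.is_mitigated()])
```

Keeping the CIA properties as an enum makes the coverage check a simple set difference rather than a string comparison.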

FRAP – Facilitated Risk Analysis Process

One well-established threat assessment technique is the Facilitated Risk Analysis Process (FRAP). In this approach, business value, rather than a pure security/compliance viewpoint, drives the threat assessment. This is important for several reasons. First, it leverages the internal experience and expertise of the various teams rather than relying on external groups to discover the risks and provide the necessary controls. Second, it is a lightweight, workshop-based approach, which avoids a common problem with organizational threat assessments: they can take months, if not years, to perform. Finally, it uses a qualitative rather than quantitative methodology. It is often difficult to determine precisely the probability and business impact of any particular risk, so using categories rather than values simplifies the analysis. As illustrated in Figure 1, a qualitative approach allows identified risks to be grouped by probability of occurrence vs. expected business impact. Any risks in the ‘red’ area must be addressed, risks categorized as ‘orange’ should be addressed, and the remainder may be addressed as resources permit.

Figure 1. Likelihood of Occurrence vs. Business Impact
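Because FRAP works with categories rather than numbers, the matrix can be expressed as a lookup table instead of a formula. The sketch below assumes a 3x3 grid with low/medium/high likelihood and impact; the zone assigned to each cell, the name ‘green’ for the remainder, and the example risks are illustrative assumptions, not a reproduction of Figure 1.

```python
# Hypothetical 3x3 grid: outer key is likelihood, inner key is business impact.
# Zone assignments are illustrative; a FRAP team agrees on its own grid.
MATRIX = {
    "high":   {"low": "orange", "medium": "red",    "high": "red"},
    "medium": {"low": "green",  "medium": "orange", "high": "red"},
    "low":    {"low": "green",  "medium": "green",  "high": "orange"},
}

ACTION = {
    "red": "must be addressed",
    "orange": "should be addressed",
    "green": "address as resources permit",
}


def prioritize(risks: dict[str, tuple[str, str]]) -> list[tuple[str, str]]:
    """Return (risk, zone) pairs ordered red first, then orange, then green."""
    order = {"red": 0, "orange": 1, "green": 2}
    zoned = [(name, MATRIX[likelihood][impact]) for name, (likelihood, impact) in risks.items()]
    return sorted(zoned, key=lambda pair: order[pair[1]])


# Example risks as (likelihood, business impact) categories.
risks = {
    "laptop theft": ("medium", "medium"),
    "ransomware on file server": ("medium", "high"),
    "data center flood": ("low", "high"),
    "printer misconfiguration": ("low", "low"),
}
for name, zone in prioritize(risks):
    print(f"{zone:>6}: {name} ({ACTION[zone]})")
```

A lookup table keeps the analysis qualitative: nothing is multiplied, and the grid itself documents what the team agreed each combination means.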

Methodology

FRAP is based on a series of threat discovery sessions, typically held by a team assembled for this purpose from across the organization. In general, the approach is as follows:

- The pre-FRAP meeting takes about an hour and includes the business manager, project lead, and facilitator. The focus is on scope, team construction, modeling forms, definitions, and meeting mechanics.

- The FRAP session takes approximately four hours and ideally involves 7 to 15 people. A facilitated workshop approach is used that addresses “what can happen?” and “what is the consequence if it did?”

- FRAP analysis and report generation usually take 4 to 6 days and are completed by the facilitator and scribe.

- The post-FRAP read-out session takes about an hour and has the same attendees as the pre-FRAP meeting.

As part of the final readout, the FRAP team should capture all investigated systems, the risks identified for those systems, and the recommended controls/mitigations for those risk areas.

The result of the FRAP is a comprehensive document that identifies threats, assigns priorities to them, and identifies controls that will help mitigate them.
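The readout contents (systems, risks, priorities, controls) map naturally onto a flat list of findings that can be rendered as a report. A minimal sketch, assuming hypothetical `Finding` records that reuse the red/orange/green zones (with ‘green’ as an assumed name for the remainder); it also lists red findings that still lack a recommended control.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Assumed priority labels, highest first.
PRIORITY_ORDER = {"red": 0, "orange": 1, "green": 2}


@dataclass
class Finding:
    """Hypothetical FRAP worksheet row."""
    system: str
    risk: str
    priority: str
    controls: list[str] = field(default_factory=list)


def build_report(findings: list[Finding]) -> str:
    """Render findings grouped by system (alphabetically), highest priority first."""
    by_system: dict[str, list[Finding]] = defaultdict(list)
    for finding in findings:
        by_system[finding.system].append(finding)

    lines = []
    for system in sorted(by_system):
        lines.append(f"System: {system}")
        for finding in sorted(by_system[system], key=lambda item: PRIORITY_ORDER[item.priority]):
            controls = ", ".join(finding.controls) or "NO CONTROL IDENTIFIED"
            lines.append(f"  [{finding.priority}] {finding.risk} -> {controls}")
    return "\n".join(lines)


def open_items(findings: list[Finding]) -> list[Finding]:
    """Red findings that still lack a recommended control."""
    return [f for f in findings if f.priority == "red" and not f.controls]


findings = [
    Finding("payroll", "unauthorized salary changes", "red", ["dual approval"]),
    Finding("payroll", "report delivered late", "green"),
    Finding("email", "phishing credential theft", "red"),
]
print(build_report(findings))
print([f.risk for f in open_items(findings)])
```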

Reference: Peltier, T., “Facilitated Risk Analysis Process (FRAP)”, Auerbach Press (2000)

OCTAVE – Operationally Critical Threat, Asset, and Vulnerability Evaluation

The intended audience for the original OCTAVE method is large organizations with 300 or more employees. Because of the high overhead of the original approach, several variants (e.g. OCTAVE Allegro) have been developed that allow smaller groups to conduct security risk assessments without needing to gain full organizational approval. Like FRAP, OCTAVE uses facilitated workshops and questionnaires to collect and organize the assessment information.

More specifically, it was designed for organizations that:

• have a multi-layered hierarchy

• maintain their own computing infrastructure

• have the ability to run vulnerability evaluation tools

• have the ability to interpret the results of vulnerability evaluations

The goals of the approach are to:

- Establish drivers, where the organization develops risk measurement criteria that are consistent with organizational drivers.

- Profile assets, where the assets that are the focus of the risk assessment are identified and profiled and the assets’ containers are identified.

- Identify threats, where threats to the assets—in the context of their containers—are identified and documented through a structured process.

- Identify and mitigate risks, where risks are identified and analyzed based on threat information, and mitigation strategies are developed to address those risks.

The methodology is illustrated in Figure 2 and is broken down further into a series of steps:

Step 1 – Establish Risk Measurement Criteria

A formal measurement approach is established and applied to every risk that is discovered. This may be similar to the approach used for FRAP (see Figure 1) or may involve more dimensions, such as breaking business impact into loss of revenue, loss of reputation, and loss of regulatory compliance (sometimes called “R3” losses). Whatever the measurement approach, it should be applied consistently across all discovered risk areas.
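One way to apply multi-dimensional criteria consistently is the relative risk score commonly described for OCTAVE Allegro: each impact area is ranked by importance, and a risk's score is the sum, over the areas it affects, of the area's rank times its impact value. The sketch below assumes three areas (revenue, reputation, regulatory) ranked 3/2/1 and a low/moderate/high scale of 1/2/3; all of these values and the example risks are illustrative.

```python
# Hypothetical ranking: the most important impact area gets the largest weight.
IMPACT_AREA_RANK = {
    "revenue": 3,
    "reputation": 2,
    "regulatory": 1,
}

IMPACT_VALUE = {"low": 1, "moderate": 2, "high": 3}


def relative_risk_score(impacts: dict[str, str]) -> int:
    """Sum of (impact-area rank x impact value) over the areas a risk affects.

    Areas missing from `impacts` contribute nothing. An unknown area or impact
    label raises KeyError, so inconsistent scoring is caught early.
    """
    return sum(IMPACT_AREA_RANK[area] * IMPACT_VALUE[level] for area, level in impacts.items())


# The same criteria are applied to every risk, so the scores are comparable.
risks = {
    "customer database breach": {"revenue": "moderate", "reputation": "high", "regulatory": "high"},
    "website defacement": {"reputation": "moderate"},
}
for name, impacts in sorted(risks.items(), key=lambda kv: relative_risk_score(kv[1]), reverse=True):
    print(name, relative_risk_score(impacts))
```

Here the breach scores 3x2 + 2x3 + 1x3 = 15 and the defacement 2x2 = 4, so the ranking reflects both how many areas are hit and how much each area matters.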

Step 2 – Create an Information Asset Profile

The assets under consideration include physical, informational, monetary, proprietary, and other types of business value. They should include applications as well as the information/data processed by those applications. Many large organizations capture this type of information in a configuration management database (CMDB). Assets may be further categorized by subject area (e.g. formulary, intellectual property, customer data).
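A profile can be kept as one structured record per asset. The sketch below assumes a hypothetical `AssetProfile` with a subject-area category, an owner, and the most important security requirement (loosely in the spirit of an OCTAVE Allegro asset profile; the exact field set is an assumption), plus a grouping helper similar to a CMDB report.

```python
from collections import defaultdict
from dataclasses import dataclass

CIA_REQUIREMENTS = {"confidentiality", "integrity", "availability"}


@dataclass(frozen=True)
class AssetProfile:
    """Hypothetical asset-profile record; field names are illustrative."""
    name: str
    category: str        # subject area, e.g. "customer data"
    kind: str            # "application" or "information"
    owner: str
    most_important: str  # which CIA requirement matters most for this asset

    def __post_init__(self) -> None:
        # Reject typos early so every profile uses the same vocabulary.
        if self.most_important not in CIA_REQUIREMENTS:
            raise ValueError(f"unknown security requirement: {self.most_important}")


def by_category(assets: list[AssetProfile]) -> dict[str, list[str]]:
    """Group asset names by subject area, preserving input order."""
    groups: dict[str, list[str]] = defaultdict(list)
    for asset in assets:
        groups[asset.category].append(asset.name)
    return dict(groups)


assets = [
    AssetProfile("CRM application", "customer data", "application", "Sales IT", "availability"),
    AssetProfile("customer records", "customer data", "information", "Sales", "confidentiality"),
    AssetProfile("drug formulary", "formulary", "information", "Pharmacy", "integrity"),
]
print(by_category(assets))
```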

Step 3 – Organize Assets into “Containers” (stored, processed, transferred)

The assets are assigned to “containers” that represent where they “live” within the organization. Software systems are typically contained within servers (this applies to cloud-based as well as on-premises resources). Data is often stored in data stores (e.g. a relational database) that are in turn hosted on physical disks. Information may be stored, transferred, and processed in different “containers” during its lifetime.
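Recording containers explicitly makes it easy to invert the map and see which assets share infrastructure, since a single compromised container then affects several assets at once. The asset names, container names, and nested-dictionary layout below are hypothetical.

```python
from collections import defaultdict

# Hypothetical container map: for each asset, where it is stored, processed and transferred.
CONTAINERS = {
    "customer records": {
        "stored": ["orders-db (relational database)", "backup tapes"],
        "processed": ["app-server-01"],
        "transferred": ["TLS link to payment gateway"],
    },
    "pricing model": {
        "stored": ["shared file server"],
        "processed": ["app-server-01", "analyst laptops"],
        "transferred": ["email"],
    },
}


def exposure_by_container(containers: dict[str, dict[str, list[str]]]) -> dict[str, set[str]]:
    """Invert the asset map: for each container, the assets exposed if it is compromised."""
    exposed: dict[str, set[str]] = defaultdict(set)
    for asset, states in containers.items():
        for locations in states.values():
            for container in locations:
                exposed[container].add(asset)
    return dict(exposed)


exposure = exposure_by_container(CONTAINERS)
# Containers shared by several assets deserve extra attention in later steps.
shared = {c: sorted(assets) for c, assets in exposure.items() if len(assets) > 1}
print(shared)  # {'app-server-01': ['customer records', 'pricing model']}
```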

Step 4 – Identify Areas of Concern

For all collected assets, determine where threats exist that are of concern to the enterprise. To help focus the discovery effort, decide what is most important to the business function. For example, a manufacturing enterprise will be concerned with supply chains, delivery models, customer sales, and throughput. A financial institution will be concerned with ensuring the integrity of transactions, providing customers with access to their financial data, and timely processing of business events (e.g. trades, contracts, transfers).

Step 5 – Establish Threat Scenarios

Threat scenarios are an exercise in “what if.” For every identified asset, the team determines what could cause disruption or loss. This requires a detailed understanding of both the asset in question and the possible risks to it.
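A simple way to make the “what if” exercise systematic is to enumerate every combination of container, actor, and outcome for an asset and let the team discard the implausible ones. The four outcomes used here (disclosure, modification, loss/destruction, interruption) are the categories commonly used in OCTAVE Allegro threat scenarios; the actor list, container names, and the pruning rule are assumptions for illustration.

```python
from itertools import product
from typing import NamedTuple


class ThreatScenario(NamedTuple):
    asset: str
    container: str
    actor: str
    outcome: str


# Illustrative inputs; a real workshop would draw these from the asset and container profiles.
ACTORS = ["insider (accidental)", "insider (deliberate)", "outsider"]
OUTCOMES = ["disclosure", "modification", "loss/destruction", "interruption"]


def what_if(asset: str, containers: list[str]) -> list[ThreatScenario]:
    """Generate every combination as a 'what if' candidate for the team to keep or discard."""
    return [ThreatScenario(asset, c, a, o) for c, a, o in product(containers, ACTORS, OUTCOMES)]


scenarios = what_if("customer records", ["orders-db", "backup tapes"])
print(len(scenarios))  # 2 containers x 3 actors x 4 outcomes = 24

# Hypothetical pruning: 'interruption' of offline backup tapes is judged not to be a concern.
plausible = [s for s in scenarios if not (s.container == "backup tapes" and s.outcome == "interruption")]
print(len(plausible))  # 21
```

Generating the full cross product first, then pruning, makes it harder for the workshop to overlook a combination simply because nobody thought of it.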

Step 6 – Identify and Analyze Risks

Each threat scenario involves one or more vulnerabilities or risks. For example, data loss can result from fire (environmental), a data breach exposing sensitive information (human actor), or a flaw in the design of the system itself (systemic).

Step 7 – Select Mitigations

Once all threats and risks have been identified for the selected assets, a set of mitigations can be proposed against them. These may include administrative or technical controls.
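OCTAVE Allegro is commonly described as choosing, for each risk, among accepting, deferring, mitigating, or transferring it. The sketch below turns a relative risk score into one of those approaches and, when mitigating, attaches candidate controls; the thresholds, risk names, and controls are hypothetical.

```python
# Candidate controls per risk (hypothetical examples), tagged administrative or technical.
CANDIDATE_CONTROLS = {
    "customer database breach": [
        ("technical", "encrypt data at rest"),
        ("administrative", "quarterly access review"),
    ],
    "website defacement": [("technical", "web application firewall")],
}


def mitigation_approach(score: int, transferable: bool = False,
                        mitigate_at: int = 12, defer_at: int = 6) -> str:
    """Choose accept / defer / mitigate / transfer from a relative risk score.

    The thresholds are illustrative assumptions; each organization sets its own.
    """
    if score >= mitigate_at:
        # High-scoring risks are acted on; transfer (e.g. insurance) when that is an option.
        return "transfer" if transferable else "mitigate"
    if score >= defer_at:
        return "defer"  # revisit once more information or resources are available
    return "accept"


def plan(risk: str, score: int, transferable: bool = False) -> tuple[str, list[tuple[str, str]]]:
    """Return the chosen approach and, when mitigating, the candidate controls to propose."""
    approach = mitigation_approach(score, transferable)
    controls = CANDIDATE_CONTROLS.get(risk, []) if approach == "mitigate" else []
    return approach, controls


print(plan("customer database breach", 15))
print(plan("website defacement", 4))
```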

Reference: Caralli, R.A., et al., “Introducing OCTAVE Allegro,” Carnegie Mellon (2007); Alberts, C. & Dorofee, A., “Managing Information Security Risks: The OCTAVE Approach,” Addison-Wesley, Boston, MA (2002), ISBN 0-321-11886-3.

ISO/IEC 27005 – Risk Assessment for Information Systems

ISO/IEC 27005 provides another approach to threat analysis. Unlike the methods above, however, the standard doesn’t specify, recommend, or even name any specific risk management method. Instead, it implies a continual process consisting of a structured sequence of activities, some of which are iterative:

- Establish the risk management context (e.g. the scope, compliance obligations, approaches/methods to be used and relevant policies and criteria such as the organization’s risk tolerance);

- Quantitatively or qualitatively assess (i.e. identify, analyze and evaluate) relevant information risks, taking into account the information assets, threats, existing controls and vulnerabilities to determine the likelihood of incidents or incident scenarios, and the predicted business consequences if they were to occur, to determine a ‘level of risk’;

- Treat (i.e. modify [use information security controls], retain [accept], avoid and/or share [with third parties]) the risks appropriately, using those ‘levels of risk’ to prioritize them;

- Keep stakeholders informed throughout the process; and

- Monitor and review risks, risk treatments, obligations and criteria on an ongoing basis, identifying and responding appropriately to significant changes.
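The standard names the four treatment options but leaves the choice among them to the organization. The sketch below is one hypothetical decision order: retain anything within the risk tolerance, avoid risks whose activity is not essential, share risks a third party can carry, and modify the rest. The `AssessedRisk` fields, the 1 to 25 level scale, the tolerance, and the examples are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class AssessedRisk:
    """Hypothetical output of the assessment step."""
    name: str
    level: int                       # 'level of risk', e.g. on a 1-25 scale
    activity_essential: bool = True  # can the risky activity simply be dropped?
    shareable: bool = False          # can a third party (insurer, provider) carry it?


def treatment(risk: AssessedRisk, tolerance: int) -> str:
    """Pick one of the four ISO/IEC 27005 treatment options (decision order is assumed)."""
    if risk.level <= tolerance:
        return "retain"
    if not risk.activity_essential:
        return "avoid"
    if risk.shareable:
        return "share"
    return "modify"  # i.e. apply information security controls


def treatment_plan(risks: list[AssessedRisk], tolerance: int) -> list[tuple[str, int, str]]:
    """Order risks by level of risk (highest first) and attach a treatment option."""
    ranked = sorted(risks, key=lambda r: r.level, reverse=True)
    return [(r.name, r.level, treatment(r, tolerance)) for r in ranked]


risks = [
    AssessedRisk("legacy FTP upload service", 16, activity_essential=False),
    AssessedRisk("laptop loss", 6),
    AssessedRisk("cloud region outage", 12, shareable=True),
    AssessedRisk("unpatched ERP server", 20),
]
for row in treatment_plan(risks, tolerance=8):
    print(row)
```

Because the plan is recomputed from the current levels and tolerance, rerunning it after a reassessment supports the ongoing monitor-and-review activity.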

The organization should establish and maintain a procedure to identify requirements for:

- Selection of risk evaluation, impact, and acceptance criteria

- Definition of scope/boundaries for information security risk management

- Risk evaluation approach

- Risk treatment and risk reduction plans

- Monitoring, review, and improvement of risk plans

- Asset identification and valuation

- Risk impact estimation

Reference: ISO/IEC 27005:2018 – Information security risk management

FMEA – Failure Mode and Effects Analysis

FMEA was originally developed in the 1950s to study failure problems with military equipment and has since been adapted to a wide array of system reliability assessments. It is a very formal review of assets and all of their possible failure modes, intended to be a thorough analysis that “leaves no stone unturned.” As such, it should be used only when highly critical assets must be reviewed to this level of detail (e.g. because of regulations or business requirements).

The analysis should always start by listing the functions that the design needs to fulfill. Functions are the starting point of FMEA, and using functions as the baseline provides the best yield from an FMEA. After all, a design is only one possible solution for performing the functions that need to be fulfilled. This way, an FMEA can be done on concept designs as well as detailed designs, on hardware as well as software, no matter how complex the design.

Worksheets are used to uniformly capture the details of each possible failure mode: (source: Failure Mode and Effect Analysis – Wikipedia)

| FMEA Ref. | Item | Potential failure mode | Potential cause(s) / mechanism | Mission Phase | Local effects of failure | Next higher level effect | System Level End Effect | (P) Probability (estimate) | (S) Severity | (D) Detection (Indications to Operator, Maintainer) | Detection Dormancy Period | Risk Level P*S (+D) | Actions for further Investigation / evidence | Mitigation / Requirements |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1.1.1.1 | Brake Manifold Ref. Designator 2b, channel A, O-ring | Internal Leakage from Channel A to B | a) O-ring Compression Set (Creep) failure b) surface damage during assembly | Landing | Decreased pressure to main brake hose | No Left Wheel Braking | Severely Reduced Aircraft deceleration on ground and side drift. Partial loss of runway position control. Risk of collision | (C) Occasional | (V) Catastrophic (this is the worst case) | (1) Flight Computer and Maintenance Computer will indicate “Left Main Brake, Pressure Low” | Built-In Test interval is 1 minute | Unacceptable | Check Dormancy Period and probability of failure | Require redundant independent brake hydraulic channels and/or Require redundant sealing and Classify O-ring as Critical Part Class 1 |

| Probability rating (P) | Meaning |
|---|---|
| A | Extremely Unlikely (Virtually impossible or No known occurrences on similar products or processes, with many running hours) |
| B | Remote (relatively few failures) |
| C | Occasional (occasional failures) |
| D | Reasonably Possible (repeated failures) |
| E | Frequent (failure is almost inevitable) |
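The worksheet's Risk Level column combines probability and severity. A minimal sketch, assuming the A to E probability ratings above map to 1 to 5, severity categories run from I (least severe) to V (catastrophic) and map to 1 to 5, and hypothetical acceptability bands; the optional detection term is ignored.

```python
# Probability letters from the rating table; severity numerals assumed to run I (least) to V.
PROBABILITY = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}
SEVERITY = {"I": 1, "II": 2, "III": 3, "IV": 4, "V": 5}


def risk_level(probability: str, severity: str) -> int:
    """Risk level P*S for one worksheet row (the optional detection term is ignored)."""
    return PROBABILITY[probability] * SEVERITY[severity]


def acceptability(level: int) -> str:
    """Map a risk level to a verdict; these bands are illustrative assumptions."""
    if level >= 10:
        return "Unacceptable"
    if level >= 5:
        return "Review"
    return "Acceptable"


# The O-ring row from the worksheet: (C) Occasional x (V) Catastrophic.
level = risk_level("C", "V")
print(level, acceptability(level))  # 15 Unacceptable
```

With these assumed bands, the example row lands in "Unacceptable," consistent with the verdict recorded in the worksheet.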

Reference: D. H. Stamatis, “Failure Mode and Effect Analysis: FMEA from Theory to Execution.” American Society for Quality, Quality Press (2003)

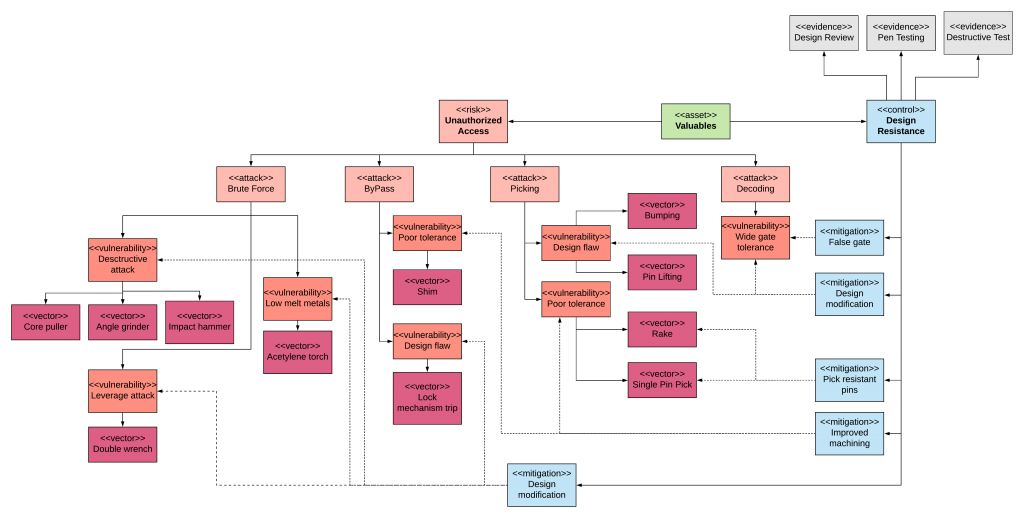

Attack-Vulnerability-Vector Risk Modeling

Yet another approach to threat assessment is to capture the results of the organizational risk analysis in an Attack-Vulnerability-Vector risk model. As illustrated in Figure 4, this approach uses a visual modeling technique to tie assets to risks and controls. Moreover, it breaks the information down into a collection of attack-vulnerability-vector subgroups, each of which can then be assigned an appropriate control. In the example below, a physical lock secures the asset. The analysis shows the ways such a device can be subverted: picking, decoding, bypassing, or brute-force attack. Each attack leverages one or more vulnerabilities, such as a shackle that can be cut with an angle grinder in a destructive attack. Mitigations are used to combat these vulnerabilities, reducing or eliminating the associated attack vector.

Figure 4. Physical Lock Threat Model – Unauthorized Access
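The lock model is essentially a tree: the asset at the root, attack vectors beneath it, and the vulnerabilities and mitigations under each vector. The sketch below encodes the example in that shape and reports which vectors still have unmitigated vulnerabilities; the class names, vulnerability wording, and mitigations are hypothetical and not taken from Figure 4.

```python
from dataclasses import dataclass, field


@dataclass
class Vulnerability:
    description: str
    mitigations: list[str] = field(default_factory=list)


@dataclass
class AttackVector:
    name: str
    vulnerabilities: list[Vulnerability]

    def open_vulnerabilities(self) -> list[str]:
        """Vulnerabilities under this vector with no mitigation assigned yet."""
        return [v.description for v in self.vulnerabilities if not v.mitigations]


# Hypothetical encoding of the physical-lock example; wording is illustrative.
lock_model = {
    "asset": "padlock securing equipment cage",
    "threat": "unauthorized access",
    "vectors": [
        AttackVector("picking", [Vulnerability("pin tumblers can be manipulated", ["security pins"])]),
        AttackVector("decoding", [Vulnerability("dial tolerances leak combination")]),
        AttackVector("bypassing", [Vulnerability("shim can release the latch", ["anti-shim latch"])]),
        AttackVector("brute force", [Vulnerability("shackle can be cut with an angle grinder",
                                                   ["hardened shackle", "shrouded shackle"])]),
    ],
}


def residual_risk(model: dict) -> dict[str, list[str]]:
    """Attack vectors that still have at least one unmitigated vulnerability."""
    return {v.name: v.open_vulnerabilities() for v in model["vectors"] if v.open_vulnerabilities()}


print(residual_risk(lock_model))  # {'decoding': ['dial tolerances leak combination']}
```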

Conclusion

While threats to our systems and practices will always exist, there are a number of ways to discover and mitigate these risks. In this post, we looked at several common approaches to the discovery, mitigation, and remediation of software system threats. In future posts, we will investigate how to create security policies that facilitate development, as well as methods for determining software system attack surfaces.
