The voice revolution is coming. But how soon, and how much is happening now? What are the opportunities for businesses? This guide to voice answers those questions and more.

Is Voice Usage Growing?

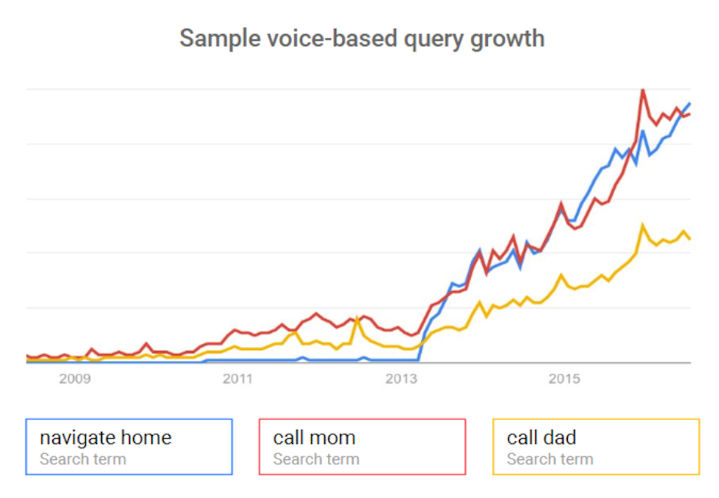

The answer is a resounding “yes,” as shown by this chart shared by Bing’s Purna Virji at Pubcon Fort Lauderdale in April 2018:

Clearly, the growth in usage is rapid, but the data doesn’t show the absolute level of usage behind that growth. We also have the prediction from Andrew Ng, then Chief Scientist at Baidu, who suggested that 50% of all searches would be by either voice or images by 2020 (just two years away!).

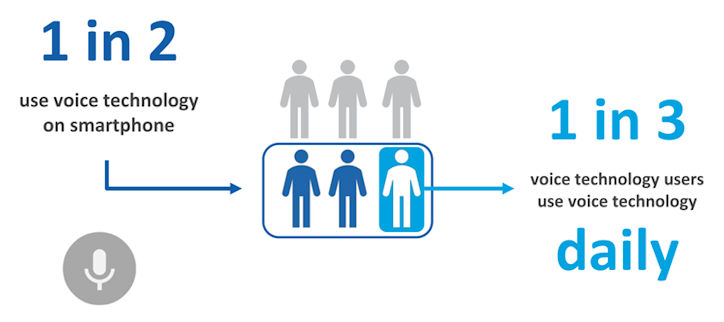

And there’s this data from comScore:

Note that none of this data shows the actual percentage of total searches performed by voice (Andrew Ng’s forecast was just a prediction). So where are we then? These three points may temper our excitement:

- I use both Siri and Google Assistant on my iPhone regularly. I have multiple Amazon Echo and Google Home devices at home. I often need to repeat commands to get them recognized. For example, I might come into my kitchen and say, “Hey Google, turn on the kitchen lights,” and need to repeat it three times to get it to execute that command. The same type of thing happens with Alexa. The point is that there are still real issues with the speech recognition side of things.

- Many factors affect the quality of speech recognition, such as the wide variety of voices (not to mention different languages, but let’s not add that complication for now) and the background noise in the environment where the device is listening.

- Humans solve these problems with relative ease. The machines are still developing a similar level of capability. They’re going to need to make a ton of progress on this front before voice use becomes ubiquitous, and this may take years to unfold.

For these reasons, I disagree with Andrew Ng’s projection. We won’t be at 50% in 2020. That said, this revolution IS coming, and you need to get ready for it. It may be many years before voice becomes more popular than typing in search queries, but that time is coming. And remember, the majority of voice usage may not be in the form of voice search queries at all.

Voice Search. Or Is It?

Across the industry, everyone seems to refer to what’s unfolding as “voice search.” That term refers to replacing the traditional search box, where we type in a query, with spoken search commands. By that definition, it would not include commands like “Call Mom.”

Nor would I consider using voice-to-text to message a friend, or setting a timer or alarm on your phone, to be a voice search. Yet personal assistants like Google Assistant, Alexa and Siri do count these as forms of voice usage.

I think we should be tracking the total landscape of voice interactions between humans and devices. Searching for answers to questions or navigating to websites will remain a big part of what personal assistants do for us, but their role is much bigger than that. That makes “voice search” a poor label for the whole category. What should we use instead? How about “voice interactions”?


What’s Driving the Growth of Voice Interactions?

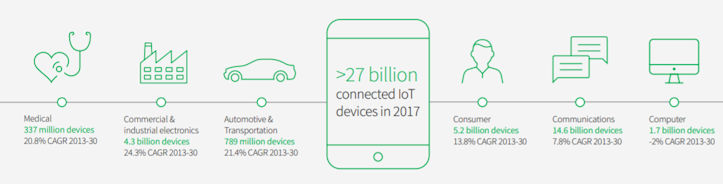

One of the major drivers is the growth of installed Internet of Things (IoT) devices. IoT refers to the different types of devices that are connected to the internet, including watches, cars, smart TVs, smart refrigerators, and more. Just how far has this come? According to IHS Markit, there were more than 27 billion IoT devices by the end of 2017:

Notice the base estimate of 1.7 billion PCs. While IHS Markit does not separate out the number of smartphones and tablets, Seeking Alpha estimates smartphones at 5 billion, and Statista estimates tablets at 1 billion. These three categories add up to 7.7 billion. That tells us that as of 2017, 72% of all the internet-connected devices are something other than a PC, smartphone or a tablet.

That means 72% of the connected devices out there likely don’t have a search box or a traditional browser to work with. So how will users communicate with those devices? Voice interaction seems like a great answer to that question! Important note: many IoT devices may involve no human interaction at all, but the IoT category as a whole is still seeing amazing growth.

We have data from Apple indicating that Siri is actively used on 500 million devices and data from Google that Google Assistant is available on more than 400 million devices.

We’re also seeing rapid growth in the use of smart speakers. According to Juniper Research, they will be in 55% of U.S. households by 2022.

Personal assistants tend to have a very high level of voice interactions, and smart speakers (devices built around a personal assistant) are designed to be voice-centric. These devices will help drive voice adoption rapidly higher.

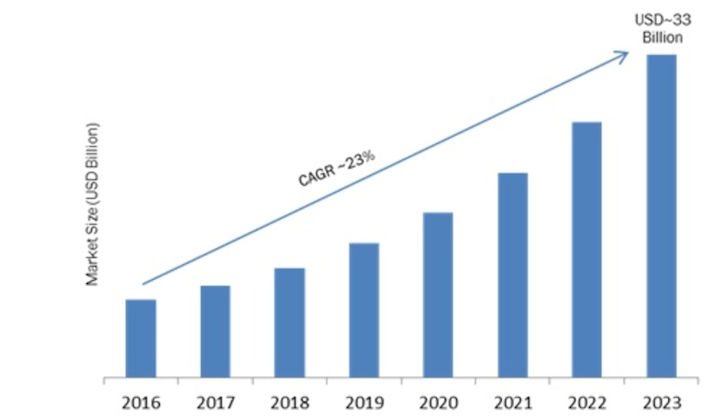

Other types of IoT devices that users will interact with are seeing very high growth as well. For example, smart thermostat sales are predicted to reach 14 million in the U.S. and Canada in 2021 and reach around 12 million in Europe in the mid-2020s. And, smart appliances, such as refrigerators, are seeing explosive growth as well:

Source: Market Research Future

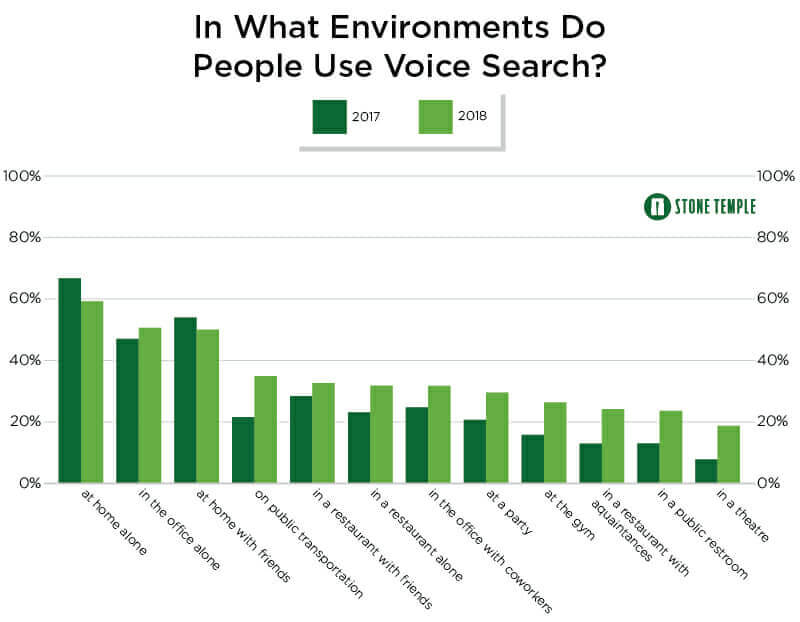

Users are also growing much more comfortable using voice with their devices in public. At Stone Temple (now Perficient Digital!), we surveyed more than 1,000 users about their voice usage in both 2017 and 2018. Here is what a year-over-year comparison showed for voice usage in different environments:

What Makes Voice Search Different?

I am going back to the term “voice search” on purpose for this section. There is a class of voice interactions that is clearly voice search. For example, a user might ask their Alexa device, “How old is Barack Obama?” and they’ll get an answer. Where do these responses come from? There are three sources:

- Featured snippets

- Public domain information

- Licensed databases of information

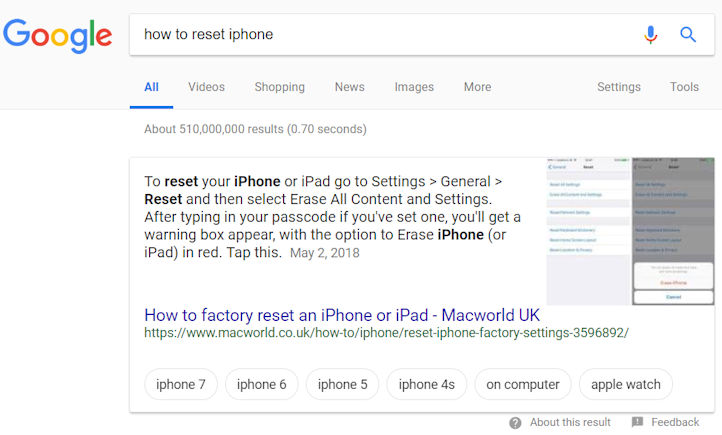

What is a Featured Snippet?

A featured snippet is information extracted from a third-party website and presented in the search results above the rest of the organic results. Featured snippets are identifiable because they include a link to the source page from which the information was drawn. Search engines like Google and Bing can use featured snippets as a data source, but Amazon and Apple do not have that option for their personal assistants. Here is an example of a featured snippet:

What is Public Domain Information?

This is information that is generally known to the public and cannot be copyrighted. An example of public domain information is the fact that the capital of Texas is Austin. One large source of public domain information is the U.S. government, which releases census data, along with data from many other sources, into the public domain. All the players in the voice interactions arena have built their own databases of public domain information and continue to enhance them on a regular basis.

What are Licensed Databases of Information?

These are databases of information from businesses that collect such information and license that data to third parties. An example of this is song lyrics. Lyrics are subject to copyright law, and even if you know what they are, you can’t republish them without permission. In Google, if you search for “walk this way lyrics,” you will get the complete lyrics presented above the regular search results because Google has licensing deals in place for the lyrics to most popular songs (including “Walk This Way”). Players like Amazon and Apple need to get all their non-public domain answers via some form of licensing.

The Opportunity with Featured Snippets

Featured snippets are used by Google and Bing in response to regular (i.e., typed) search queries and get top-tier positioning in the search results, so it’s essential to learn how to get your site selected as a source for featured snippets. But they also play a large role in the world of voice search.

In a voice search world, you get one answer in response to your query. If you’re not the source of that one answer, you get no visibility at all. Literally none. And research has shown that a large percentage of the responses to voice search queries come from featured snippets. As a result, participation in the voice search ecosystem demands that you develop skills for obtaining featured snippets. Those skills will help drive growth in your traditional organic search traffic as well.

You can learn more about how to get featured snippets in this eight-step guide.

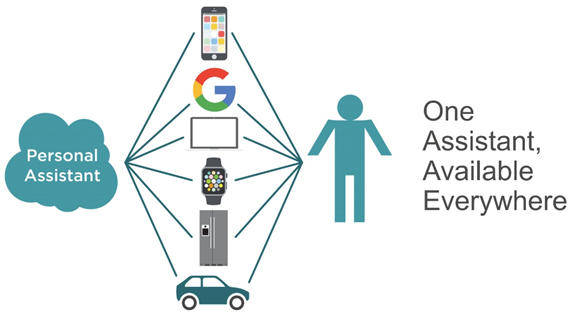

The Role of Personal Assistants

It’s natural to associate your personal assistant with the device it’s on—Alexa with your Amazon Echo, Google Assistant with your smartphone or Google Home device and so forth. However, these personal assistants reside in the cloud, and there is no need for them to have any tie to a specific device at all. You can view the relationship between you, the devices, and your personal assistant like this:

For example, you might start a conversation with your cloud-based personal assistant through your TV, go to the kitchen and continue the dialogue through your refrigerator, and then, as you get into your car to go to the store, finish the conversation there. Reinforcing this flexibility is the fact that the personal assistant will recognize your voice, so there will be no need to “log in” to start or continue the dialogue.
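Here is a toy Python (3.9+) sketch of that cloud-centric model. It assumes a hypothetical service that stores conversation state by recognized speaker rather than by device. `CloudAssistant`, `handle_utterance`, and the plain-string speaker ID are illustrative assumptions, not any vendor’s API, and real speaker recognition is far more involved.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Dialogue history for one speaker, stored in the cloud rather than on a device."""
    turns: list[tuple[str, str]] = field(default_factory=list)  # (device, utterance)


class CloudAssistant:
    """Hypothetical cloud assistant; devices are thin clients that forward what they hear."""

    def __init__(self) -> None:
        # Keyed by speaker ID (assumed to come from voice recognition), not by device ID.
        self._conversations: dict[str, Conversation] = defaultdict(Conversation)

    def handle_utterance(self, speaker_id: str, device: str, utterance: str) -> int:
        """Record a turn from any device and return the conversation's turn count."""
        convo = self._conversations[speaker_id]
        convo.turns.append((device, utterance))
        return len(convo.turns)

    def history(self, speaker_id: str) -> list[tuple[str, str]]:
        """Return a copy of the speaker's conversation across all devices."""
        return list(self._conversations[speaker_id].turns)


assistant = CloudAssistant()
assistant.handle_utterance("alice", "tv", "What should I cook tonight?")
assistant.handle_utterance("alice", "fridge", "Do I have the ingredients?")
assistant.handle_utterance("alice", "car", "Add the missing ones to my shopping list.")
for device, text in assistant.history("alice"):
    print(f"[{device}] {text}")
```

The key design point is where the state lives. Each device only forwards what it hears, so any device in the house (or the car) can pick up the same conversation.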

As we saw in the data from Bing, one of the most popular uses of personal assistants today is to ask informational questions. This raises the question of which one is smartest. In a study conducted here at Stone Temple (now Perficient Digital), we found that Google Assistant is the smartest, but Amazon’s Alexa and Microsoft’s Cortana are not far behind.

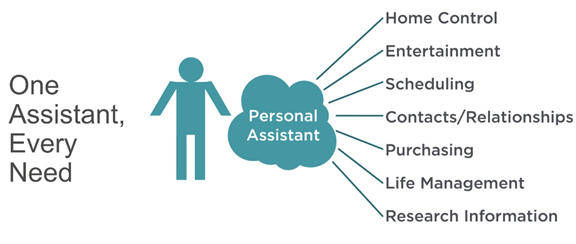

Another major aspect of personal assistants is that they will be developed over time to address nearly all your online needs. Want to book a trip to the Caribbean for a winter vacation? You’ll be able to use your personal assistant. Want to program a schedule for all your lights, heating and cooling systems, and other aspects of your house? You’ll be able to do that in your personal assistant too. And, of course, buying stuff online, getting answers to questions and doing research will all be part of the package.

The good news for businesses is that companies like Google, Amazon, Apple, and Microsoft will rely on third parties to develop apps that run within their platforms. This is where the opportunities lie for the rest of us to benefit from the rise of these platforms. We’ll examine that next.

Building a Personal Assistant App

Many companies are already building Alexa Skills and Actions on Google apps. These apps live in the Alexa and Google Assistant ecosystems and are available to users who know about them. In the Alexa environment, the user must explicitly install the Skill before it can be used. In the Google Assistant environment, the app can be invoked in one of two ways:

- The user invokes the app by name, in a query like: “Ask Perficient Digital: what is a NoIndex tag.” Note that no prior step of installing the Perficient Digital Action is required.

- The user asks a query that the Action can answer, without explicitly invoking the Action, and Google decides to suggest your Action to the user. These are referred to as Implicit Queries, and they represent one of the most exciting opportunities with Actions on Google apps. Basically, Google is giving your brand exposure to users who did not know about your Action.

The basic concept of building a Skill or an Action can be quite simple. For example, you can develop a Skill/Action that answers simple questions for users. The Perficient Digital Skill/Action answers questions related to SEO and digital marketing. As shown above, you can ask it questions like “What is a NoIndex tag?” or “What does a NoFollow tag do?” and it will respond with the answer.
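At its core, a question-answering Skill or Action maps a recognized intent to a response. The minimal Python sketch below shows only that fulfillment step. The intent names and answer text are hypothetical. On a real platform, the intent name would arrive in a request from Alexa or Dialogflow rather than as a plain function argument.

```python
# Hypothetical intent names mapped to the spoken answers the app returns.
ANSWERS = {
    "NoIndexIntent": (
        "A NoIndex tag tells search engines not to include a page in their index."
    ),
    "NoFollowIntent": (
        "A NoFollow attribute asks search engines not to pass ranking credit through a link."
    ),
}

# Spoken when the recognized intent has no stored answer.
FALLBACK = "Sorry, I don't have an answer for that yet. Try asking about NoIndex or NoFollow tags."


def fulfill(intent_name: str) -> str:
    """Return the spoken response for an intent, or the fallback if it is unknown."""
    return ANSWERS.get(intent_name, FALLBACK)


print(fulfill("NoIndexIntent"))
print(fulfill("WeatherIntent"))
```

Adding a new question means adding one entry to the table. The harder part is teaching the platform which phrasings should map to each intent.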

Try out the Perficient Digital Skill/Action apps!

A good place to start is Dialogflow.com. This website is from Google and will help you build an Action app. The same site can also be used to output apps for many other platforms, such as Facebook Messenger, Slack, Viber, and Twitter. It also outputs JSON code that can easily be modified to create an Alexa Skill.

The key thing to understand is that you will need to build a very detailed language model. That means for a question like, “What is a NoIndex tag?” you need to specify the phrase variations you will support. A few examples of these are:

- What does a NoIndex tag do?

- Tell me what a NoIndex tag does.

- How does the NoIndex tag work?

- What is it that a NoIndex tag accomplishes?

Unless you specify the major phrase variants, neither Alexa nor Google Assistant can reliably recognize phrasings like the ones above. This can be a lot of work!
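For Alexa, phrase variants like these become the sample utterances in a Skill’s interaction model. That model is a JSON document with `interactionModel`, `languageModel`, `invocationName`, and `intents` fields. The Python sketch below builds a minimal one. The invocation name and `NoIndexIntent` are hypothetical. The sketch also strips question marks and periods, on the assumption that Alexa rejects that punctuation in sample utterances; check the current Alexa Skills Kit documentation for the exact rules.

```python
import json
import re

# Phrase variants for one question; "NoIndexIntent" is a hypothetical intent name.
NOINDEX_VARIANTS = [
    "What is a NoIndex tag?",
    "What does a NoIndex tag do?",
    "Tell me what a NoIndex tag does.",
    "How does the NoIndex tag work?",
    "What is it that a NoIndex tag accomplishes?",
]


def to_sample_utterance(phrase: str) -> str:
    """Lowercase a phrase and drop punctuation other than apostrophes and hyphens.

    Assumption: Alexa sample utterances reject most punctuation, such as ? and .
    """
    cleaned = re.sub(r"[^\w\s'-]", "", phrase.lower())
    return " ".join(cleaned.split())


def build_interaction_model(invocation_name: str, intents: dict[str, list[str]]) -> dict:
    """Build a minimal Alexa-style interaction model from intent -> phrase variants."""
    custom = [
        {"name": name, "slots": [], "samples": [to_sample_utterance(p) for p in phrases]}
        for name, phrases in intents.items()
    ]
    # Amazon built-in intents that Alexa skills are expected to handle.
    built_in = [
        {"name": n, "samples": []}
        for n in ("AMAZON.CancelIntent", "AMAZON.HelpIntent", "AMAZON.StopIntent")
    ]
    return {
        "interactionModel": {
            "languageModel": {
                "invocationName": invocation_name,
                "intents": custom + built_in,
                "types": [],
            }
        }
    }


model = build_interaction_model("perficient digital", {"NoIndexIntent": NOINDEX_VARIANTS})
print(json.dumps(model, indent=2))
```

Every additional question repeats this pattern with its own intent and its own list of variants. That repetition is why building the language model takes so much effort.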

Once you’ve developed the app, you can run it in test mode before submitting it for certification. This is true for both Alexa and Google Assistant.

Personas in Voice Apps

One last important thing, and one that many brands are overlooking, is the role of personas. You can, should, and must project your brand persona via your voice app.

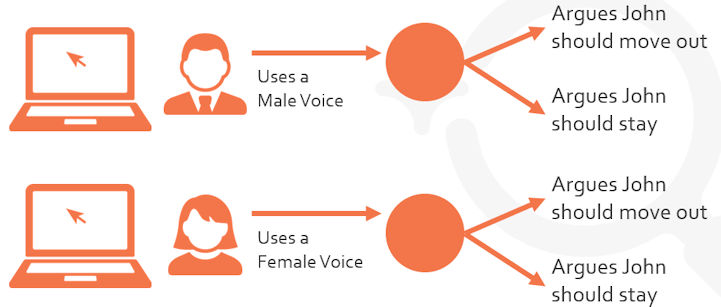

The reason is that humans instinctively attach a personality to a voice, whether it comes from a human or a machine. In fact, a Stanford University study by Eun-Ju Lee, Clifford Nass, and Scott Brave showed that computer-generated speech can have gender. To test this, the study posed a basic dilemma:

Amy and John are roommates in an apartment and are not romantically involved. However, Amy’s parents think that she’s rooming with another woman, and now they’re coming out for a visit. So, the question posed in the study was, “Should Amy ask John to move out during the visit?” In the study, an equal number of men and women were each split into four groups. Each group heard a computer voice argue one side of the dilemma: either that Amy should ask John to move out, or that John should stay. Further, two different voices were used, one female and one male.

The net result was eight experimental conditions:

- One group of women heard an argument by a male voice that John should move out.

- One group of women heard an argument by a male voice that John should stay.

- One group of women heard an argument by a female voice that John should move out.

- One group of women heard an argument by a female voice that John should stay.

- One group of men heard an argument by a male voice that John should move out.

- One group of men heard an argument by a male voice that John should stay.

- One group of men heard an argument by a female voice that John should move out.

- One group of men heard an argument by a female voice that John should stay.
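The eight conditions above are every combination of three two-level factors: listener gender, voice gender, and the position argued. That makes it a 2 × 2 × 2 design. A short Python sketch with `itertools.product` regenerates the list in the same order. It reproduces the design as described here, not the researchers’ actual materials.

```python
from itertools import product

LISTENERS = ("women", "men")
VOICES = ("male", "female")
POSITIONS = ("John should move out", "John should stay")

# Every combination of the three two-level factors: 2 x 2 x 2 = 8 conditions.
conditions = list(product(LISTENERS, VOICES, POSITIONS))

for listener, voice, position in conditions:
    print(f"A group of {listener} heard a {voice} voice argue that {position}.")

print(len(conditions), "conditions")
```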

All the groups were of equal size. The study showed that both men and women were more likely to accept the argument made by a voice in their own gender.

Another study by Clifford Nass and Kwan Min Lee showed that computer voices can convey personality as well. People recognize a computer’s voice as introverted or extroverted, and they’re more likely to like a voice that matches their own personality type.

From a voice app perspective, understanding these dynamics is incredibly important. Your brand should have its own persona, and it should match up with the preferences of the target audience for your business. Using the default voices provided by Alexa or the Google Assistant is just not going to cut it.

You’ll need to develop a persona strategy, find the right voice talent and invest in developing a persona that will best serve your brand’s interests.

For more information on voice personas and persona design, check out the presentation that Duane Forrester and I jointly did at SMX Advanced.
