This page contains the 2018 update to our Digital Personal Assistants accuracy study. In this new edition of the study, we tested 4,942 queries on five different devices:

- Alexa

- Cortana Invoke

- Google Assistant on Google Home

- Google Assistant on a Smartphone

- Siri

In this study, we compare the accuracy of each of these personal assistants in answering informational questions. In addition, we’ll show how these results have changed from last year’s edition of this study, which may be found here.

For those of you who want the TL;DR report, here it is:

- Google Assistant still answers the most questions and has the highest percentage answered fully and correctly.

- Cortana has the second highest percentage of questions answered fully and correctly.

- Alexa has made the biggest year-over-year gains, answering 2.7 times more questions than in last year’s study.

- Every competing personal assistant made significant progress in closing the gap with Google.

If you’re not interested in any background and just want to see the test results, jump down to the section called “Which Personal Assistant is the Smartest?”

What is a Digital Personal Assistant?

A digital personal assistant is a software-based service that resides in the cloud, designed to help end users complete tasks online. These tasks include answering questions, managing schedules, controlling home devices, playing music, and much more. Sometimes called personal digital assistants, or just personal assistants, the leading examples in the market are Google Assistant, Amazon Alexa, Siri (from Apple), and Microsoft’s Cortana.

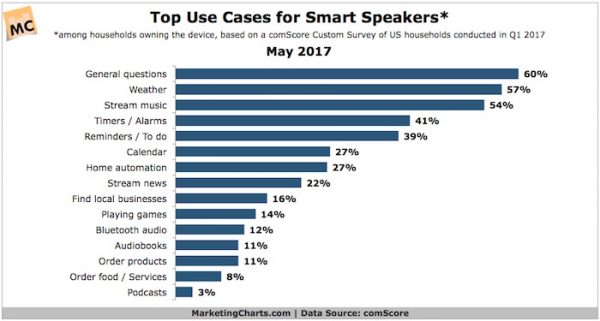

Do People Ask Questions of Digital Personal Assistants?

Before we dig into our results, it’s fair to ask whether or not getting answers to questions is a primary use for devices like Google Home, Amazon Echo, etc. As it turns out, a survey by Comscore showed asking general questions as the number one use for such devices!

Structure of the Test

We collected a set of 4,942 questions to ask each personal assistant. We asked each of the five contestants the identical set of questions and noted many different possible categories of answers, including:

- If the assistant answered verbally

- Whether an answer was received from a database (like the Knowledge Graph)

- If an answer was sourced from a third-party source (“According to Wikipedia …”)

- How often the assistant did not understand the query

- When the device tried to respond to the query, but simply got it wrong

All four of the personal assistants include capabilities to help take actions on your behalf (such as booking a reservation at a restaurant, ordering flowers, booking a flight), but that was not something we tested in this study. We focused on testing which of them was the smartest from a knowledge perspective.

Which Personal Assistant is the Smartest?

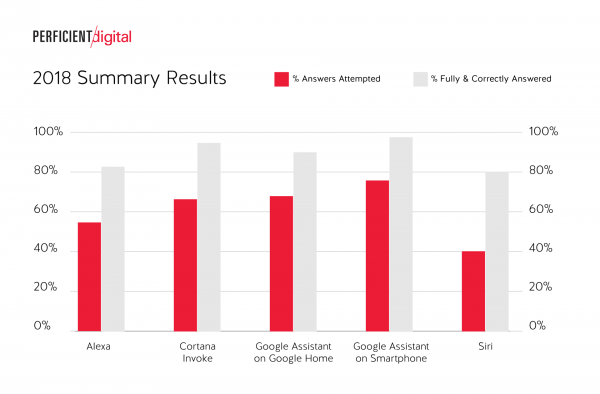

Here are the basic results of our 2018 research:

Here is How the Results are Defined:

1. Answers Attempted means that the personal assistant thinks that it understands the question, and makes an overt effort to provide a response. This does not include results where the response was “I’m still learning” or “Sorry, I don’t know that” or responses where an answer was attempted but the query was heard incorrectly (this latter case was classified separately as it indicates a limitation in language skills, not knowledge). I’ll expand upon this definition more below.

2. Fully & Correctly Answered means that the precise question asked was answered directly and fully. For example, if the personal assistant was asked, “How old is Abraham Lincoln?”, but answered with his birth date, that would not be considered fully and correctly answered.

Or, if the question was only partially answered in some other fashion, it was not counted as fully and correctly answered either. To put it another way, did the user get 100% of the information that they asked for in the question, without requiring further thought or research?
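To make the two definitions concrete, here is a minimal Python sketch of how the two percentages could be computed from a list of classified responses. The outcome labels (`full_correct`, `partial`, `wrong`, `no_answer`, `misheard`) and the `summarize` helper are hypothetical names chosen for illustration, not the study's actual coding scheme. The sketch also assumes that the fully-and-correctly-answered rate is measured against attempted answers rather than against all queries, and the sample data is made up.

```python
from collections import Counter

# Hypothetical outcome labels; the study does not publish its exact coding scheme.
# "no_answer" ("Sorry, I don't know that") and "misheard" are excluded from attempts,
# following the definition of Answers Attempted above.
ATTEMPTED_OUTCOMES = {"full_correct", "partial", "wrong"}


def summarize(outcomes):
    """Return (pct_attempted, pct_fully_correct) for a list of outcome labels."""
    counts = Counter(outcomes)
    total = len(outcomes)
    attempted = sum(counts[label] for label in ATTEMPTED_OUTCOMES)
    pct_attempted = 100.0 * attempted / total if total else 0.0
    # Assumption: accuracy is measured against attempted answers, not all queries.
    pct_fully_correct = (
        100.0 * counts["full_correct"] / attempted if attempted else 0.0
    )
    return pct_attempted, pct_fully_correct


# Made-up sample of ten responses, for illustration only.
sample = (
    ["full_correct"] * 6
    + ["partial"]
    + ["wrong"]
    + ["no_answer"]
    + ["misheard"]
)
print(summarize(sample))  # (80.0, 75.0)
```

In this made-up sample, eight of ten queries count as attempted, and six of those eight are fully and correctly answered.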

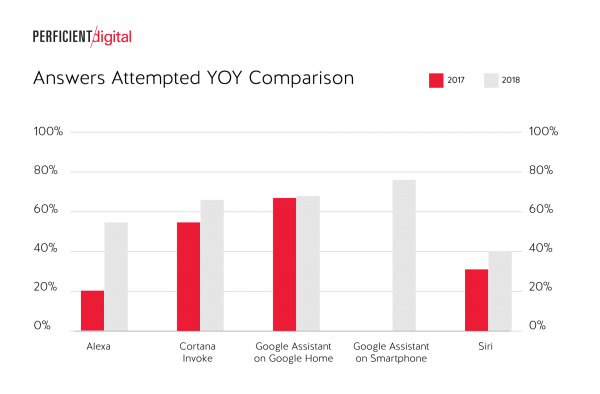

Next, here is a comparison of answers attempted for 2017 and 2018 (note: we did not run Google Assistant on a Smartphone in 2017, which is why this is shown only for 2018):

All four personal assistants increased the number of attempted answers, but the most dramatic change was in Alexa, which went from 19.8% to 53.0%. Cortana Invoke saw the second-largest increase in attempted answers (53.9% to 64.5%), followed by Siri (31.4% to 40.7%).
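As a quick check on those figures, the following Python sketch computes the year-over-year change two ways: as a gain in percentage points and as a multiple of the 2017 value. The percentages are the ones quoted above; the `yoy_change` helper is a hypothetical name used only for this illustration. Treating the ratio of percentages as the ratio of questions answered assumes the query sets were of similar size in both years.

```python
# Attempted-answer percentages quoted in the text: (2017, 2018).
attempted = {
    "Alexa": (19.8, 53.0),
    "Cortana Invoke": (53.9, 64.5),
    "Siri": (31.4, 40.7),
}


def yoy_change(before, after):
    """Return (percentage-point gain, multiple of the earlier value)."""
    return round(after - before, 1), round(after / before, 1)


for name, (y2017, y2018) in attempted.items():
    points, multiple = yoy_change(y2017, y2018)
    print(f"{name}: +{points} points, {multiple}x")
```

For Alexa this works out to a gain of 33.2 points, or roughly 2.7 times the 2017 level, which matches the figure used elsewhere in this study.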

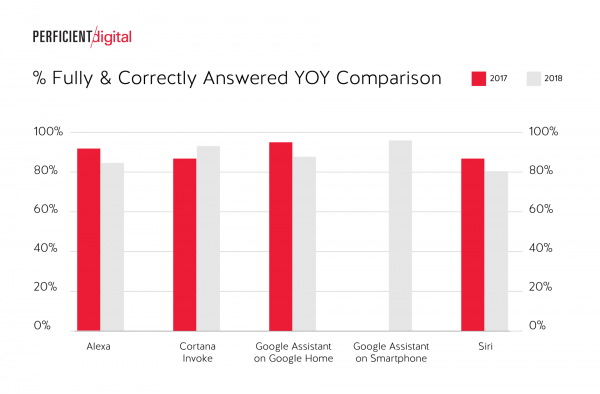

Now, let’s take a look at the completeness and accuracy comparison:

The only assistant that increased its accuracy year over year was Cortana Invoke (86.0% to 92.1%). Alexa decreased its fully and correctly answered level a bit, but in light of increasing its attempted responses by 2.7x, this decrease is not a bad result.

Note that counting a response as 100% complete and correct requires that the question be answered fully and directly. As it turns out, there are many different ways to not be 100% correct or complete:

- The query might have multiple possible answers, such as, “How fast does a jaguar go?” (“jaguar” could be an animal, a car, or a type of guitar).

- Instead of ignoring a query that it does not understand, the personal assistant may choose to map the query to something it thinks of as topically “close” to what the user asked for.

- The assistant may have provided a partially correct response.

- The assistant may have responded with a joke.

- Or, it may simply get the answer flat-out wrong (this was pretty rare).

You can see more details on the nature of the errors in a more detailed analysis below.

A few summary observations from the 2018 update to this data:

- Google Assistant still answers the most questions and has the highest percentage answered fully and correctly.

- Cortana has the second-highest percentage of questions answered fully and correctly.

- Alexa made the biggest year-over-year gains, answering 2.7 times more questions than in last year’s study.

- Every competing personal assistant made significant progress in closing the gap with Google.

Exploring the Types of Mistakes Personal Assistants Make

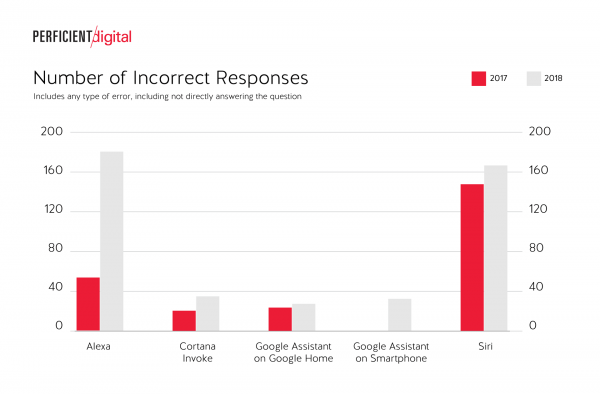

So how often do the personal assistants simply get the answer wrong? Here is a quick look:

Alexa had the most incorrect responses, but it also increased the number of questions it responded to by far the most. Siri was right there with Alexa in the number of incorrect answers, but its count increased only marginally over the 2017 results.

Many of the “errors” for both Alexa and Siri came from poorly structured queries or obscure queries, such as, “What movies does The Rushmore, New York appear in?” More than one-third of the queries generating incorrect responses in both Alexa and Siri came from similarly obscure queries.

After extensive analysis of the incorrect responses across all five tested scenarios, we found that basically all of the errors were obvious in nature. In other words, when a user hears or sees the response, they’ll know that they received an incorrect answer.

Put another way, we did not see any wrong answers where the user would be fundamentally misled. A misleading answer would be one where, for example, a user asks, “How many centimeters are in an inch?” and receives the response, “There are 2.7 centimeters in an inch.” (The correct answer is 2.54, by the way.)

Examples of Incorrect Answers

In our test, we asked all the personal assistants, “Who is the voice of Darth Vader?” Here is how Siri responded:

As you can see, it responds with a list of movies that include Darth Vader. The response is topically relevant, but it doesn’t answer the user’s question.

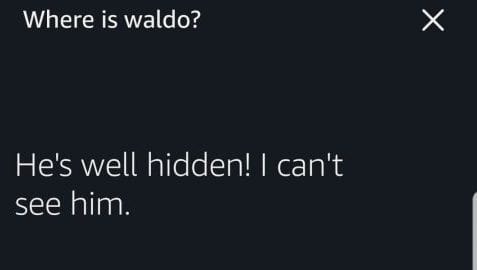

Next, let’s look at one from the Google Assistant, with the question, “How to make sand?”

Note that if you’re looking to make something that is sand-like for your kids to play with, this would be the correct answer.

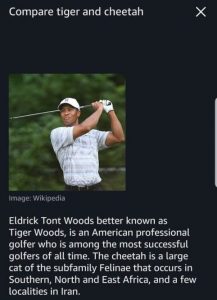

On Alexa, an example error is the query “Compare tiger and cheetah.” Here is the result:

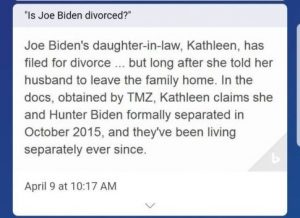

Last, but not least, here is one for Cortana: “Is Joe Biden divorced?”

Once again, the assistant had the right general idea, but the question is not really about his daughter-in-law.

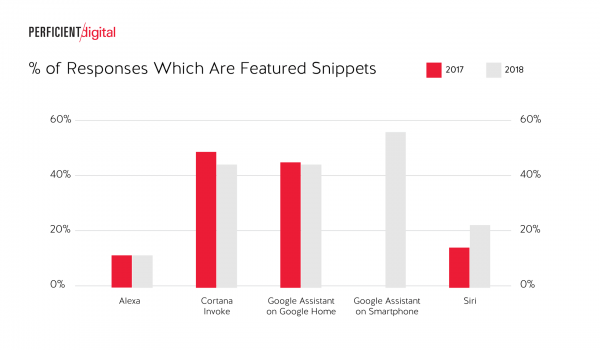

Featured Snippets

Another area we explored was the degree to which each personal assistant supports featured snippets. What are featured snippets? These are answers provided by a personal assistant or a search engine that have been sourced from a third party. They are generally recognizable because the personal assistant or search engine will provide clear attribution to the third-party source of the information.

Let’s take a look at the data.

As you can see, there is not much movement among the assistants year over year, except for Siri, whose use of featured snippets increased from 14.3% to 23.0%.

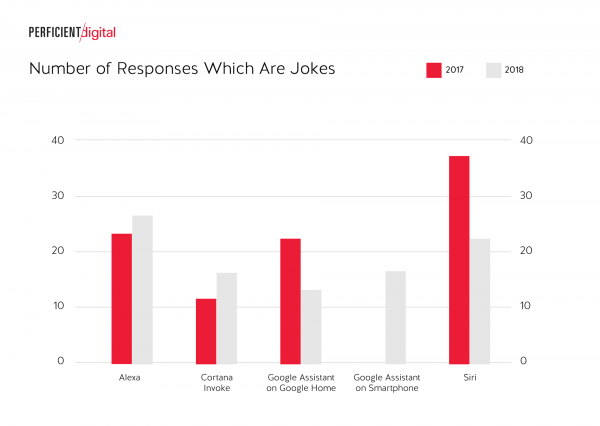

Which Personal Assistant is the Funniest?

All of the personal assistants tell jokes in response to some questions. Here’s a summary of how many we encountered in our 4,942 query test:

Siri used to be the leader here, but Alexa takes that crown in 2018 (based on our limited test). Here are some examples of jokes we found in our 2018 test, starting with Alexa:

Here is one from Siri:

Summary

Google Assistant running on a Smartphone remains the leader in number of questions answered, and in answering questions fully and correctly. Cortana came in a close second for answering questions fully and correctly. Alexa made the most progress by far in closing the gap from the 2017 results, increasing the number of questions answered by 2.7 times. Siri also made material improvements. There is no doubt that this space is seriously heating up.

One major area not covered in this test is the overall connectivity of each personal assistant with other apps and services. This is an incredibly important part of rating a personal assistant as well. You can expect all four companies to be pressing hard to connect to as many quality apps and service providers as possible, as this will have a major bearing on how effective they all are.

Disclosure: This author has multiple Google Home and Amazon Echo devices at home. In addition, Perficient Digital has built both an Amazon Skill and an Actions on Google app to answer SEO questions. The author also owns an iPhone and a Pixel 2.